Agents

Basics of Agents

Start with definitions.

Agents, Environments, Percepts, and Actions

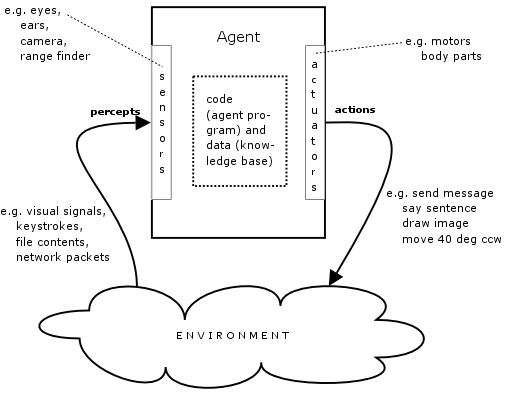

An agent is something that perceives its environment (by receiving percepts through sensors) and acts upon its environment (via actuators that produce actions).

An agent knows what actions it is performing, though it doesn’t necessarily know what effects they will have on the environment.

Agent Behavior and Implementation

The agent’s behavior is specified by an agent function, which maps the percept sequence to date to an action. The agent function can refer to knowledge of the environment.

type agent_function = percept list → action

The agent’s implementation consists of the hardware and the agent program (code) that implements the agent function.

The agent internally stores knowledge about the environment (data). Some knowledge can be pre-loaded by the agent designer, and some is computed over time from the percepts.
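The agent function is defined over the whole percept sequence, but an agent program is handed one percept at a time. Here is a minimal sketch of how the program can bridge that gap. It assumes the agent function is supplied as a `java.util.function.Function` over the percept history, and the class name `HistoryAgent` is hypothetical:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

/**
 * Hypothetical sketch: an agent program that realizes an agent function
 * (percept list -> action) by remembering every percept it has received.
 */
class HistoryAgent<P, A> {
    // Knowledge computed over time: the percept sequence to date.
    private final List<P> history = new ArrayList<>();
    // The agent function; it may also encode knowledge pre-loaded by the designer.
    private final Function<List<P>, A> agentFunction;

    HistoryAgent(Function<List<P>, A> agentFunction) {
        this.agentFunction = agentFunction;
    }

    /** Called once per step with only the current percept. */
    A execute(P percept) {
        history.add(percept);
        // The agent function sees a read-only view of the whole history.
        return agentFunction.apply(Collections.unmodifiableList(history));
    }
}
```

For instance, `new HistoryAgent<String, Integer>(List::size)` answers 1 on its first call and 2 on its second, because its agent function looks only at the length of the history. Storing the entire history is the simplest choice but not a scalable one; practical agent programs usually keep a compact summary instead.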

You can start an agent framework in Java like this:

```java
/**
 * Something that can think and act.
 */
public interface Agent<Percept, Action> {

    /**
     * Returns the action that the agent should do, given the (current) percept. An
     * implementor of this interface will probably add the current percept into its
     * internal knowledge base and thus be able to choose an action based on the
     * current percept history.
     */
    Action execute(Percept p);
}
```

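An alternative, non-generic version uses marker interfaces for percepts and actions:

```java
// Each public interface would go in its own source file.
public interface Percept {}

public interface Action {}

public interface Agent {
    Action execute(Percept p);
}
```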

Hint: Try writing various implementing classes, and see if the types "get in your way." Do you think the problems with this approach have something to do with why AI programming has traditionally been done in dynamically typed languages, like Lisp and Python?

Here’s a Standard ML framework:

```sml
(*
 * Anything that can sense and act.
 *)
signature AGENT =
sig
  type percept;
  type action;
  val execute: percept -> action;
end;
```

Agent Performance

How do we know if the agent function is a good one? We have to define a performance measure for it. A performance measure needs to be objective (not subjective) and defined externally.

The performance measure can be complex, with lots of variables, and consideration of rewards and penalties. Examples:

| Agent | Performance Measure Considerations |
|---|---|
| Refinery Controller | Add points for purity, throughput, capacity utilization; subtract points for accidents, safety violations, pollution, downtime. |
| Aircraft Autolander | Add points for a safe landing; subtract points for accidents, taking too long, using too much fuel, damage to plane, harrowed passengers, landing on the wrong runway or at the wrong airport. |

Omniscience, Clairvoyance, Rationality, Autonomy

Important terminology helpful in agent design:

- Agents that are omniscient have infinite knowledge: they know the actual outcomes of all their actions and so can maximize performance perfectly. They are impossible to create for non-trivial environments, so we don’t consider them.

- Agents that are clairvoyant can perceive things by means other than sensory input, so we don’t consider them.

- Agents that are rational always choose the action that maximizes the expected value of the performance measure, using only the knowledge they currently have and the percept sequence taken in up to that time. Rationality requires information gathering and learning.

- A non-autonomous agent uses only its initial knowledge for decision making; an autonomous agent augments its knowledge from its percepts. Where initial knowledge can be incomplete, non-existent, or partially or wholly incorrect, autonomy is a good thing.
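The definition of rationality above is essentially an argmax over expected performance. The sketch below is minimal and assumes that the agent's current knowledge can be summarized as a hypothetical table mapping each action to a probability distribution over performance scores. `RationalChoice` and `Outcome` are illustrative names:

```java
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: pick the action with the highest expected performance. */
final class RationalChoice {
    /** One possible result of an action: its probability and its performance score. */
    static final class Outcome {
        final double probability;
        final double performance;

        Outcome(double probability, double performance) {
            this.probability = probability;
            this.performance = performance;
        }
    }

    /** Expected value of the performance measure (probabilities assumed to sum to 1). */
    static double expectedValue(List<Outcome> outcomes) {
        double sum = 0.0;
        for (Outcome o : outcomes) {
            sum += o.probability * o.performance;
        }
        return sum;
    }

    /** Returns the action whose outcome distribution has the largest expected value. */
    static <A> A choose(Map<A, List<Outcome>> beliefs) {
        A best = null;
        double bestValue = Double.NEGATIVE_INFINITY;
        for (Map.Entry<A, List<Outcome>> entry : beliefs.entrySet()) {
            double value = expectedValue(entry.getValue());
            if (value > bestValue) {
                bestValue = value;
                best = entry.getKey();
            }
        }
        return best;
    }
}
```

Suppose one action surely scores 5 and another scores 12 or −4 with equal probability. Then `choose` picks the sure action, because 0.5 × 12 + 0.5 × (−4) = 4 < 5. The agent is still rational on the occasions when the gamble would have paid off, and that is the difference between rationality and omniscience.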

The Vacuum World Example

This is the first example in Russell and Norvig, enhanced with a few new actions:

- The agent is a (trivial) robotic vacuum cleaner

- It can be either in square A or B

- A square can be clean or dirty

- The agent can perceive only its current location and whether the current location is clean or dirty

- It can move or suck

- It has a power-save mode

- A good performance measure should say something about how clean it keeps the squares

The Four Possible Percepts

(A, clean)

(A, dirty)

(B, clean)

(B, dirty)

The Six Possible Actions

DoNothing

Right

Left

Suck

EnterPowerSaveMode

ExitPowerSaveMode
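A percept pairs a location with a status, so the four percepts are exactly the cross product of two small sets. The sketch below enumerates them alongside the six actions. The type names `Location`, `Status`, `VacuumPercept` and `VacuumWorldSpaces` are hypothetical. The action constants match the `VacuumAction` enum in the Java code for this environment:

```java
import java.util.ArrayList;
import java.util.List;

enum Location { A, B }

enum Status { CLEAN, DIRTY }

/** The six actions, in the same order as the VacuumAction enum in these notes. */
enum VacuumAction { DO_NOTHING, LEFT, RIGHT, SUCK, ENTER_POWER_SAVE_MODE, EXIT_POWER_SAVE_MODE }

/** A percept: the agent's current location and whether that square is dirty. */
final class VacuumPercept {
    final Location location;
    final Status status;

    VacuumPercept(Location location, Status status) {
        this.location = location;
        this.status = status;
    }

    @Override
    public String toString() {
        return "(" + location + ", " + status + ")";
    }
}

final class VacuumWorldSpaces {
    /** Every location paired with every status: 2 x 2 = 4 percepts. */
    static List<VacuumPercept> allPercepts() {
        List<VacuumPercept> percepts = new ArrayList<>();
        for (Location location : Location.values()) {
            for (Status status : Status.values()) {
                percepts.add(new VacuumPercept(location, status));
            }
        }
        return percepts;
    }
}
```

Printing `VacuumWorldSpaces.allPercepts()` gives `[(A, CLEAN), (A, DIRTY), (B, CLEAN), (B, DIRTY)]`, which are the same four percepts listed above.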

Considerations in Designing Performance Measures

- Amount of dirt sucked up? — not good in cases where the agent can keep dumping out dirt and sucking it back up

- We probably should penalize noise and power consumption — constantly moving back and forth when both squares are clean is wasteful.
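One way to address both considerations is to score the state of the world (how many squares are clean) rather than the amount of dirt sucked up, and to charge for effort. Here is a minimal sketch. The weights are hypothetical choices made for illustration: 1 point per clean square per time step, 0.5 per move, and 0.1 per step spent out of power-save mode.

```java
import java.util.List;

/** Hypothetical performance measure for the two-square vacuum world. */
final class VacuumScore {
    // Illustrative weights only; choosing them is part of the design problem.
    static final double CLEAN_SQUARE_REWARD = 1.0;
    static final double MOVE_PENALTY = 0.5;      // noise and wear from moving
    static final double POWER_ON_PENALTY = 0.1;  // power used outside power-save mode

    /** What happened during one time step. */
    static final class Step {
        final int cleanSquares;   // 0, 1, or 2 after the step
        final boolean moved;      // did the agent do LEFT or RIGHT?
        final boolean poweredOn;  // was the agent out of power-save mode?

        Step(int cleanSquares, boolean moved, boolean poweredOn) {
            this.cleanSquares = cleanSquares;
            this.moved = moved;
            this.poweredOn = poweredOn;
        }
    }

    /** Total score for a run: reward clean squares, penalize effort. */
    static double score(List<Step> run) {
        double total = 0.0;
        for (Step s : run) {
            total += CLEAN_SQUARE_REWARD * s.cleanSquares;
            if (s.moved) {
                total -= MOVE_PENALTY;
            }
            if (s.poweredOn) {
                total -= POWER_ON_PENALTY;
            }
        }
        return total;
    }
}
```

With these weights, three steps spent pacing between two clean squares score about 4.2, while three steps idling in power-save mode score 6.0. Dumping dirt can only lower the clean-square count, so it never pays.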

Degree of Abstraction in the Environment

- Things not specified now: how and when do the squares get dirty? What if the bag fills up and explodes? What if the robot explodes or the environment is destroyed by fire, flood, or earthquake?

Java code for this environment and agent

```java
/**
 * An action that a vacuum agent can take.
 */
public enum VacuumAction {
    DO_NOTHING, LEFT, RIGHT, SUCK, ENTER_POWER_SAVE_MODE, EXIT_POWER_SAVE_MODE;
}

/**
 * A room in the vacuum world. There are only two rooms, called A and B. A room
 * can be clean or dirty.
 */
public enum Room {
    A, B;

    public enum Status {
        CLEAN, DIRTY;
    }

    private Status status;

    public Status getStatus() {
        return status;
    }

    public void setStatus(Status status) {
        this.status = status;
    }
}
```

Task Environments

A task environment is the problem to which an agent is a solution.

Specified with PEAS:

- Performance

- Environment

- Actuators

- Sensors
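A PEAS description is just four lists, so it can be written down as data. The sketch below records one possible reading of the two-square vacuum world described earlier. The class `Peas` and the exact wording of each entry are illustrative assumptions, drawn from the vacuum-world bullet points and the performance-measure considerations:

```java
import java.util.List;

/** Hypothetical container for a PEAS task-environment description. */
final class Peas {
    final List<String> performance;
    final List<String> environment;
    final List<String> actuators;
    final List<String> sensors;

    Peas(List<String> performance, List<String> environment,
         List<String> actuators, List<String> sensors) {
        // Defensive copies keep a description immutable once built.
        this.performance = List.copyOf(performance);
        this.environment = List.copyOf(environment);
        this.actuators = List.copyOf(actuators);
        this.sensors = List.copyOf(sensors);
    }

    /** One reading of the two-square vacuum world described earlier. */
    static Peas twoSquareVacuumWorld() {
        return new Peas(
            List.of("clean squares", "low power consumption", "little noise"),
            List.of("squares A and B", "dirt"),
            List.of("wheels (Left, Right)", "suction (Suck)", "power-save switch"),
            List.of("location sensor", "dirt sensor"));
    }
}
```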

Examples of Task Environments

Here is a table for you to fill in. Use the textbook, the web, or common sense:

| Agent | Performance Measure | Environment | Actuators | Sensors |
|---|---|---|---|---|
| Robot Vacuum Cleaner | | | | |
| Math Tutor | | | | |
| Theorem Proving Assistant | | | | |
| Mars Rover | | | | |
| Taxi Driver | | | | |
| Shopping Bot | | | | |
| Chess Playing Program | | | | |
| Go Playing Program | | | | |
| Medical Diagnostic System | | | | |
| Psychiatry Bot | | | | |
| Aircraft Autolander | | | | |
| Music Composer | | | | |
| Poetry Composer | | | | |
| Protein Finder | | | | |
| Postal Handwriting Scanner and Sorter | | | | |
| Real-Time Natural Language Translator | | | | |
| Essay Evaluator | | | | |
| Refinery Controller | | | | |

Environment Categorization

Six dimensions from Russell and Norvig

- Fully observable — Partially observable

Do the sensors perceive the entire state of the environment?

- Deterministic — Strategic — Stochastic

Does the next state depend only on the current state and the agent’s action? (Strategic means deterministic except for the actions of other agents.)

- Episodic — Sequential

Does the current episode (sense-act pair) depend on the last one?

- Static — Semidynamic — Dynamic

Can the environment change while the agent is deliberating? (Semidynamic means the environment itself does not change while time passes, but the agent’s performance score does, for example because the agent is penalized for thinking too long.)

- Discrete — Continuous

Applies to the set of environment states, the set of percepts, the set of actions, the interpretation of time and space.

- Single-agent — Multi-agent

Need to know which other objects in the environment are agents or not. Multi-agent considerations: communication, cooperation, competition, randomizing behavior in competitive environments.

Here is another table for you to fill in. Some of the slots don’t have cut-and-dried answers; discuss and debate these with your friends. (Hey, maybe the environment is too vaguely specified.)

| Environment | Fully or Partially Observable | Deterministic or Stochastic (or Strategic) | Episodic or Sequential | Static or Dynamic (or Semi) | Discrete or Continuous | Single or Multi Agent |
|---|---|---|---|---|---|---|
| Soccer | | | | | | |
| Refinery Control | | | | | | |
| Vacuuming your Room | | | | | | |
| Chess (with a clock) | | | | | | |
| Natural Language Translation | | | | | | |
| Image Analysis | | | | | | |
| Shopping | | | | | | |
| Theorem Proving | | | | | | |
| Taxi Driving | | | | | | |
| Backgammon | | | | | | |
| Go | | | | | | |
| Weather Prediction | | | | | | |
| Essay Evaluation | | | | | | |
| Traffic Control | | | | | | |
| Microsurgery | | | | | | |
| Music Composition | | | | | | |
| Flight Scheduling | | | | | | |
| Legal Advising | | | | | | |
| Internet Search | | | | | | |
| Handwriting Analysis | | | | | | |
| Medical Diagnosis | | | | | | |
| Cryptograms | | | | | | |
| Internet Packet Routing | | | | | | |

Agent Design

We can classify agents with four criteria:

- Do they act by reflex, deliberate before acting, or do a bit of both?

- For partially observable environments, do they make models of the environment or just rely on the current percept and a priori knowledge?

- How do they, if at all, attempt to maximize their performance?

- Can they learn or not?

Basic Agent Programs

Remember that the agent function is implemented by an agent program. The agent program is often a subroutine that maps the current percept to an action. Some controller repeatedly calls this subroutine until the agent is dead. Such a subroutine typically works like this:

- Take the current percept as input to the subroutine.

- Optionally, use information from this percept to augment your knowledge about the environment (that is, augment your model (the state) of the environment).

- Optionally, based on the updated state, and your hardwired knowledge of how your environment evolves and knowledge of how your actions are likely to affect it, compute some useful information to help you pick an action in the next step.

- Choose an action. The action may be chosen by several means:

  - By reflex: consulting a list of rules of the form "If the state has property P then do action A."

  - By using search or planning techniques to make progress toward a goal.

  - By consulting a utility function that maps a state to a real number defining how happy the agent will be in that state.
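The steps above can be sketched as a small model-based agent that chooses actions by reflex rules. The sketch rests on several assumptions. The model type, the update function, and the rules are supplied by the designer. The optional look-ahead step is omitted. The names `RuleBasedAgent` and `ConditionActionRule` are hypothetical. A search-based or utility-based agent would replace only the rule loop at the end of `execute`.

```java
import java.util.List;
import java.util.function.BiFunction;
import java.util.function.Predicate;

/** "If the state has property P then do action A", represented as data. */
final class ConditionActionRule<S, A> {
    final Predicate<S> condition;
    final A action;

    ConditionActionRule(Predicate<S> condition, A action) {
        this.condition = condition;
        this.action = action;
    }
}

/**
 * Hypothetical sketch of the agent-program subroutine: update an internal
 * model from the percept, then choose an action by reflex rules.
 */
final class RuleBasedAgent<S, P, A> {
    private S state;                           // the agent's model of the environment
    private final BiFunction<S, P, S> update;  // folds a new percept into the model
    private final List<ConditionActionRule<S, A>> rules;
    private final A defaultAction;             // chosen when no rule matches

    RuleBasedAgent(S initialState, BiFunction<S, P, S> update,
                   List<ConditionActionRule<S, A>> rules, A defaultAction) {
        this.state = initialState;
        this.update = update;
        this.rules = List.copyOf(rules);
        this.defaultAction = defaultAction;
    }

    A execute(P percept) {
        // Take the percept and use it to update the model of the environment.
        state = update.apply(state, percept);
        // Choose an action by reflex: the first rule whose condition holds wins.
        for (ConditionActionRule<S, A> rule : rules) {
            if (rule.condition.test(state)) {
                return rule.action;
            }
        }
        return defaultAction;
    }
}
```

Suppose the model is simply the latest percept, there is a single rule mapping `"dirty"` to `"suck"`, and the default action is `"move"`. Then the agent answers `"suck"` to `"dirty"` and `"move"` to `"clean"`.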

Example: Reflex Vacuum Agent

```java
/**
 * The trivial vacuum agent.
 */
public class TrivialReflexVacuumAgent implements Agent<Room, VacuumAction> {

    private boolean inPowerSaveMode = true;

    /**
     * Power up if not powered up, clean a dirty room if in one, move to the other
     * room if the current room is clean.
     */
    @Override
    public VacuumAction execute(Room room) {
        if (inPowerSaveMode) {
            inPowerSaveMode = false;
            return VacuumAction.EXIT_POWER_SAVE_MODE;
        } else if (room.getStatus() == Room.Status.DIRTY) {
            return VacuumAction.SUCK;
        } else if (room == Room.A) {
            return VacuumAction.RIGHT;
        } else {
            return VacuumAction.LEFT;
        }
    }
}
```

A test case

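The original test case is not available. As a stand-in, here is a hypothetical check. It repeats the interface, enums, and agent from above so that it compiles on its own, and it uses a plain method that throws `AssertionError` instead of a test framework such as JUnit. The check has to play the controller's role itself, setting the rooms' statuses to simulate the effects of the agent's actions.

```java
// Copies of the types above, repeated so that this file compiles on its own.
interface Agent<Percept, Action> {
    Action execute(Percept p);
}

enum VacuumAction {
    DO_NOTHING, LEFT, RIGHT, SUCK, ENTER_POWER_SAVE_MODE, EXIT_POWER_SAVE_MODE;
}

enum Room {
    A, B;

    enum Status { CLEAN, DIRTY }

    private Status status;

    public Status getStatus() { return status; }

    public void setStatus(Status status) { this.status = status; }
}

class TrivialReflexVacuumAgent implements Agent<Room, VacuumAction> {
    private boolean inPowerSaveMode = true;

    @Override
    public VacuumAction execute(Room room) {
        if (inPowerSaveMode) {
            inPowerSaveMode = false;
            return VacuumAction.EXIT_POWER_SAVE_MODE;
        } else if (room.getStatus() == Room.Status.DIRTY) {
            return VacuumAction.SUCK;
        } else if (room == Room.A) {
            return VacuumAction.RIGHT;
        } else {
            return VacuumAction.LEFT;
        }
    }
}

/** Hypothetical check: feed the agent a fixed scenario and compare its answers. */
final class TrivialReflexVacuumAgentCheck {
    static void run() {
        Room.A.setStatus(Room.Status.DIRTY);
        Room.B.setStatus(Room.Status.CLEAN);
        Agent<Room, VacuumAction> agent = new TrivialReflexVacuumAgent();
        expect(VacuumAction.EXIT_POWER_SAVE_MODE, agent.execute(Room.A)); // first call wakes up
        expect(VacuumAction.SUCK, agent.execute(Room.A));                 // A is dirty
        Room.A.setStatus(Room.Status.CLEAN);                              // simulate the effect of sucking
        expect(VacuumAction.RIGHT, agent.execute(Room.A));                // A is clean, so go right
        expect(VacuumAction.LEFT, agent.execute(Room.B));                 // B is clean, so go left
    }

    private static void expect(VacuumAction expected, VacuumAction actual) {
        if (expected != actual) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }
}
```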

Note that in this architecture the agents do not directly update the environment: the agent function simply returns the action it wants to do. Some controller outside the agent is repeatedly calling the agent’s execute() method and updating the environment. Of course it is possible to design completely different frameworks; for now, just understand this one.
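The outside controller described here might look like the following minimal sketch. It owns the true state of the world, builds each percept, asks the agent for an action, and applies that action. The names `VacuumWorldController`, `Location`, and `Status` are hypothetical. So that the sketch stands alone, the agent is passed as a plain `BiFunction` from (location, status) to action. The sketch also assumes that sucking always cleans the current square and that squares never get dirty on their own.

```java
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;

enum Location { A, B }

enum Status { CLEAN, DIRTY }

enum VacuumAction { DO_NOTHING, LEFT, RIGHT, SUCK, ENTER_POWER_SAVE_MODE, EXIT_POWER_SAVE_MODE }

/**
 * Hypothetical controller: it owns the true world state and is the only code
 * that changes it. The agent just maps a percept to an action.
 */
final class VacuumWorldController {
    private final Map<Location, Status> world = new EnumMap<>(Location.class);
    private Location agentLocation;

    VacuumWorldController(Status a, Status b, Location start) {
        world.put(Location.A, a);
        world.put(Location.B, b);
        agentLocation = start;
    }

    /** Calls the agent repeatedly, applying each action; returns the actions chosen. */
    List<VacuumAction> run(BiFunction<Location, Status, VacuumAction> agent, int steps) {
        List<VacuumAction> trace = new ArrayList<>();
        for (int i = 0; i < steps; i++) {
            // Sense: build the percept (location, status) from the true state.
            VacuumAction action = agent.apply(agentLocation, world.get(agentLocation));
            trace.add(action);
            // Act: the controller, not the agent, updates the environment.
            switch (action) {
                case SUCK:
                    world.put(agentLocation, Status.CLEAN);  // assumed: sucking always works
                    break;
                case LEFT:
                    agentLocation = Location.A;
                    break;
                case RIGHT:
                    agentLocation = Location.B;
                    break;
                default:
                    break;  // DO_NOTHING and power-mode changes leave the squares alone
            }
        }
        return trace;
    }

    Status statusOf(Location location) {
        return world.get(location);
    }
}
```

Suppose the reflex rule is written as the lambda `(loc, st) -> st == Status.DIRTY ? VacuumAction.SUCK : (loc == Location.A ? VacuumAction.RIGHT : VacuumAction.LEFT)`. Running it for four steps, starting in A with both squares dirty, yields `[SUCK, RIGHT, SUCK, LEFT]` and leaves both squares clean.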

In Standard ML...

```sml
(*
 * The very trivial vacuum agent, implemented as a singleton.
 *)
structure TrivialVacuumAgent : AGENT =
struct
  datatype action = Left | Right | Suck | DoNothing | EnterPowerSaveMode
                  | ExitPowerSaveMode;
  datatype square = A | B;
  datatype status = Clean | Dirty;
  type percept = square * status;

  (* A ref cell, because a plain val binding cannot be reassigned;
     "inPowerSaveMode = false" would only be an equality test. *)
  val inPowerSaveMode = ref true;

  fun execute (location, state) =
    if !inPowerSaveMode then (inPowerSaveMode := false; ExitPowerSaveMode)
    else if state = Dirty then Suck
    else if location = A then Right
    else (* location = B *) Left;
end;
```

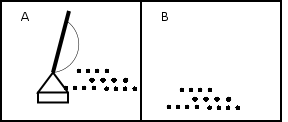

Problems with Pure Reflex Agents

But be careful with pure reflex agents in partially observable environments. (Note the infinite loop when both squares are clean.)
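The pure reflex agent cannot see the other square, so once both squares are clean it alternates Right, Left, Right, and so on forever. One common remedy is to give the agent a little internal state. The sketch below remembers which squares it has seen clean and does nothing once both are. It assumes that clean squares never get dirty again, which the notes leave unspecified, and it omits power-save handling. The class name `RememberingVacuumAgent` is hypothetical.

```java
import java.util.EnumSet;
import java.util.Set;

enum Location { A, B }

enum Status { CLEAN, DIRTY }

enum VacuumAction { DO_NOTHING, LEFT, RIGHT, SUCK, ENTER_POWER_SAVE_MODE, EXIT_POWER_SAVE_MODE }

/**
 * Hypothetical model-based vacuum agent (power-save handling omitted).
 * Assumes a square that has been seen clean stays clean.
 */
final class RememberingVacuumAgent {
    // The agent's model: squares it has perceived as clean.
    private final Set<Location> seenClean = EnumSet.noneOf(Location.class);

    VacuumAction execute(Location location, Status status) {
        if (status == Status.DIRTY) {
            return VacuumAction.SUCK;
        }
        seenClean.add(location);
        if (seenClean.size() == Location.values().length) {
            return VacuumAction.DO_NOTHING;  // both squares known clean: stop pacing
        }
        return location == Location.A ? VacuumAction.RIGHT : VacuumAction.LEFT;
    }
}
```

Given `(A, CLEAN)` and then `(B, CLEAN)`, this agent answers `RIGHT` and then `DO_NOTHING`, and it keeps answering `DO_NOTHING`. The powered-up reflex agent would answer `LEFT` at that point and keep pacing.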

Learning Agents

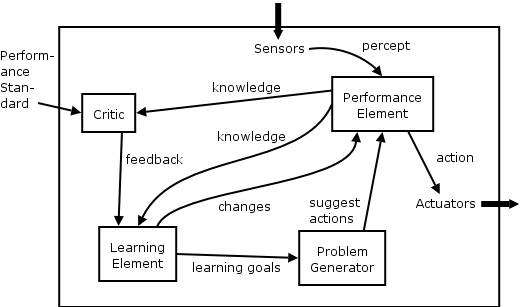

It’s often easier to build agents with little or no environmental knowledge and give them the ability to learn so as to improve performance. Conceptually, a learning agent looks like this (slightly modified from Figure 2.15 of Russell and Norvig):

The four components:

- Critic — evaluates how well the agent is doing with respect to the external performance standard

- Learning Element — makes improvements

- Performance Element — contains the knowledge about the environment; selects the actions

- Problem Generator — suggests "actions that will lead to new and informative experiences"
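Below is a toy rendering of the four components, built on heavy simplifying assumptions. Percepts and actions are strings. The performance element is a table of average rewards per (percept, action) pair. The critic's judgment arrives as a number. The problem generator explores by picking a random action with a fixed probability. All names (`Critic`, `ToyLearningAgent`, `explorationRate`) are hypothetical, and real learning agents are far richer than this.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

/** Critic: scores what happened against a fixed, external performance standard. */
interface Critic {
    double evaluate(String percept, String action);
}

/** Hypothetical toy learning agent with the four components made explicit. */
final class ToyLearningAgent {
    private final List<String> actions;
    private final double explorationRate;  // probability of exploring on a step
    private final Random random;
    // Knowledge used by the performance element: average reward per (percept, action).
    private final Map<String, Double> averageReward = new HashMap<>();
    private final Map<String, Integer> timesTried = new HashMap<>();

    ToyLearningAgent(List<String> actions, double explorationRate, long seed) {
        this.actions = List.copyOf(actions);
        this.explorationRate = explorationRate;
        this.random = new Random(seed);
    }

    private static String key(String percept, String action) {
        return percept + "|" + action;
    }

    /** Performance element: best average reward so far (ties go to the earliest action). */
    String bestAction(String percept) {
        String best = actions.get(0);
        for (String a : actions) {
            if (averageReward.getOrDefault(key(percept, a), 0.0)
                    > averageReward.getOrDefault(key(percept, best), 0.0)) {
                best = a;
            }
        }
        return best;
    }

    /** Problem generator: occasionally try a random action; otherwise exploit. */
    String execute(String percept) {
        if (random.nextDouble() < explorationRate) {
            return actions.get(random.nextInt(actions.size()));
        }
        return bestAction(percept);
    }

    /** Learning element: fold the critic's reward into a running average. */
    void learn(String percept, String action, double reward) {
        String k = key(percept, action);
        int n = timesTried.getOrDefault(k, 0) + 1;
        double old = averageReward.getOrDefault(k, 0.0);
        timesTried.put(k, n);
        averageReward.put(k, old + (reward - old) / n);
    }

    /** One full cycle: act, let the critic judge the action, learn from the verdict. */
    String step(String percept, Critic critic) {
        String action = execute(percept);
        learn(percept, action, critic.evaluate(percept, action));
        return action;
    }
}
```

With `explorationRate` set to 0, the agent only exploits. If `"move"` is listed first, it keeps choosing that action and never discovers that sucking is rewarded. The problem generator exists to fill exactly this gap.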