Problem Solving

Definitions

- Problem Solving Agent

- An agent that tries to come up with a sequence of actions that will bring the environment into a desired state.

- Search

- The process of looking for such a sequence, involving a systematic exploration of alternative actions.

Searching is one of the classic areas of AI.

Problems

A problem is a tuple $(S, s, A, \rho, G, P)$ where

- $S$ is a set of states

- $s \in S$ is the initial state

- $A$ is a set of actions (sometimes called operators)

- $\rho: S \times A \rightarrow S$ is a partial function, that tells you for each state, which actions will take you to which states.

- $G: S \rightarrow \textrm{bool}$ is the goal test function, which tells you whether a state is a goal state or not (some people would be just as happy postulating a set of goal states)

- $P: S \times (A \times S)* \rightarrow \textrm{real}$ is the path cost function. A path is a sequence $[s_0 a_1 s_1 a_2 s_2 ... a_k s_k]$ such that $\forall i \in \{1..k\} \; \rho(s_{i-1}, a_i) = s_i$.

Example: A water jug problem

You have a two-gallon jug and a one-gallon jug; neither have any measuring marks on them at all. Initially both are empty. You need to get exactly one gallon into the two-gallon jug. Formally:

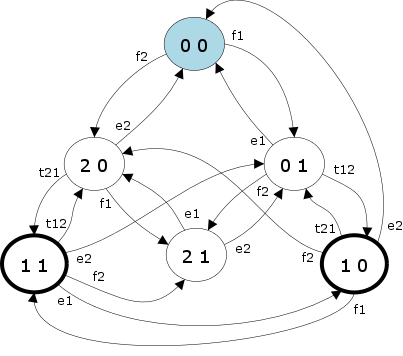

- $S = \{(0,0),(1,0),(2,0),(0,1),(1,1),(2,1)\}$ (or, if you prefer, $\{0,1,2\} × \{0,1\}$

- $s = (0,0)$

- $A = \{f2, f1, e2, e1, t21, t12\}$

- $\rho$ is given by the diagram and the table below

- $G = \lambda(x, y) . x=1$

- $P(p) = \textrm{length}(p)$ (the number of actions in the path)

A graphical view of the transition function (initial state shaded, goal states outlined bold):

And a tabular view:

| f2 | e2 | f1 | e1 | t21 | t12 | |

|---|---|---|---|---|---|---|

| (0,0) | (2,0) | — | (0,1) | — | — | — |

| (1,0) | (2,0) | (0,0) | (1,1) | — | (0,1) | — |

| (2,0) | — | (0,0) | (2,1) | — | (1,1) | — |

| (0,1) | (2,1) | — | — | (0,0) | — | (1,0) |

| (1,1) | (2,1) | (0,1) | — | (1,0) | — | (2,0) |

| (2,1) | — | (0,1) | — | (2,0) | — | — |

To solve this problem, an agent would start at the initial state and explore the state space by following links until it arrived in a goal state. A solution to the water jug problem is a path from the initial state to a goal state.

Example solutions

- $[f1, f2, e2, t12]$

- $[f1, e1, f2, t21, t12, f1, e2, t12]$

- $[f2, t21]$

There are an infinite number of solutions. Sometimes we are interested in the solution with the smallest path cost; more on this later.

- How many states are there?

- How many (legal) transitions are there?

- Solve the problem by hand

s(a, b, c) which returns the action sequence

necessary to get c gallons into the jug with capacity a, given that the

other jug has capacity b. Assume that a, b, and c are all positive

integers and a > b and a > c. Does this problem always have

a solution? If so, prove it; if not, give values for a, b, and c

meeting the above constraints for which no solution exists.Awww Man.... Why are we studying this?

There’s this thing called the Problem Space Hypothesis, due to Newell and Simon: All goal-oriented symbolic activity occurs in problem spaces. If they’re right, we’re spending time wisely.

Even if they’re not completely right, there are still zillions of problems that can be formulated in problem spaces, e.g.

| Problem | States | Actions |

|---|---|---|

| 8-puzzle | Tile configurations | Up, Down, Left, Right |

| 8-queens (incremental formulation) | Partial board configurations | Add queen, remove queen |

| 8-queens (complete-state formulation) | Board configurations | Move queen |

| TSP | Partial tours | Add next city, pop last city |

| Theorem Proving | Collection of known theorems | Rules of inference |

| Vacuum World | Current Location and status of all rooms | Left, Right, Suck |

| Road Navigation (Route Finding) | Intersections | Road segments |

| Internet Searching | Pages | Follow link |

| Counterfeit Coin Problem | A given weighing | Outcome of the weighing (less, equal, greater) |

Problem Types

State Finding vs. Action Sequence Finding

A fundamental distinction:

| Action Sequence Finding | State Finding |

|---|---|

| We know the state space in advance. We know which states are goals. We have to find the sequence of actions that get us to a goal state. The sequence may be contingent, or expressed as an AND-OR tree, but the actions matter. | We only know the properties that a goal state should have, but we don’t even know if any goal states exist. We just need to find a state that satisfies certain constraints! We don’t care what action sequence gets us there. |

| Optimality is concerned with "cheapest path" | Optimality is concerned with the "best state" |

| Examples: 8-puzzle, water jug, vacuum world, route navigation, games, many robotics problems | Examples: N-queens, integrated circuit layout, factory floor layout, job-shop scheduling, automatic programming, portfolio management, network optimization, most other kinds of optimization problems |

Offline vs. Online Problems

In an online problem, the agent doesn’t even know what the state space is, and has to build a model of it as it acts. In an offline problem, percepts don’t matter at all. An agent can figure out the entire action sequence before doing anything at all.

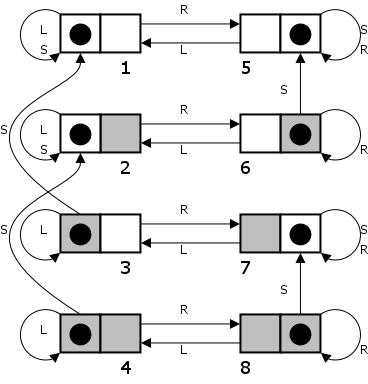

Offline Example: Vacuum World with two rooms, cleaning always works, a square once cleaned stays clean. States are 1 – 8, goal states are 1 and 5.

Sensorless (Conformant) Problems

The agent doesn’t know where it is. We can use belief states (sets of states that the agent might be in). Example from above deterministic, static, single-agent vacuum world:

| In State | Left | Right | Suck |

|---|---|---|---|

| 12345678 | 1234 | 5678 | 1257 |

| 1234 | 1234 | 5678 | 12 |

| 5678 | 1234 | 5678 | 57 |

| 1257 | 123 | 567 | 1257 |

| 12 | 12 | 56 | 12 |

| 57 | 13 | 57 | 57 |

| 123 | 123 | 567 | 12 |

| 567 | 123 | 567 | 57 |

| 56 | 12 | 56 | 5 |

| 13 | 13 | 57 | 1 |

| 5 | 1 | 5 | 5 |

| 1 | 1 | 5 | 1 |

Note the goal states are 1 and 5. If a state 15 was reachable, it would be a goal too.

Contingency Problems

Contingency Problem: The agent doesn’t know what effect its actions will have. This could be due to the environment being partially observable, or because of another agent. Ways to handle this:

- Solution is a list of conditional actions

- Interleave search and execution

Example: Partially observable vacuum world (meaning you don’t know the status of the other square) in which sucking in a clean square may make it dirty.

- No way to preplan a solution!!

- A solution starting in a state where you’re in the

left room and it’s dirty is:

Suck Right if dirty then Suck

Can also model contingency problems is with "AND-OR graphs".

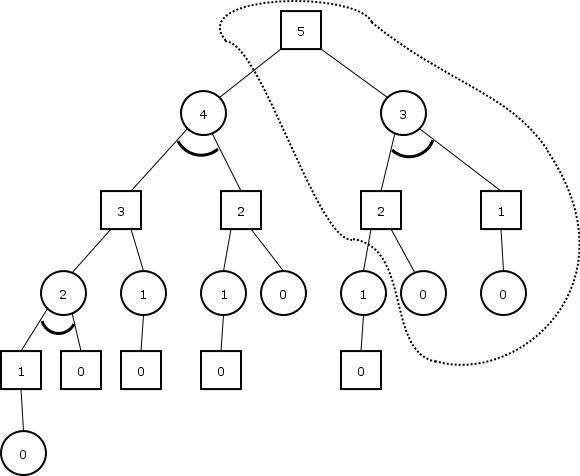

Example: find a winning strategy for Nim if there are only five stones in one row left. You are player square. You win if it is player circle’s turn with zero stones left.

In general then, a solution is a subtree in which

- The root node is in the subtree

- For every OR node in the subree, at least one child is in the subtree

- For every AND node in the subtree, all children are in the subtree

- All leaves are goal states

If the tree has only OR nodes, then the solution is just a path.

Search Algorithms

Strategies

Hey, we know what a problem is, what a problem space is, and even what a solution is, but how exactly do we search the space? Well there are zillions of approaches:

- breadth-first, uniform-cost

- depth-first, backtracking, depth-limited, depth-first iterative deepening

- backwards chaining, bidirectional search

- greedy best-first, A*, IDA*, RBFS, IE, MA*, SMA*

- hill-climbing (stochastic, first-choice, random-restart), random walk

- simulated annealing, beam search, genetic algorithms

- LRTA*

Types of Problem Solving Tasks

Agents may be asked to be

- Satisficing — find any solution

- Optimizing — find the best (cheapest) solution

- Semi-optimizing — find a solution close to the optimal

An algorithm is

- Complete, if will find a solution if one exists

- Optimal, if it finds the cheapest solution

Search Trees

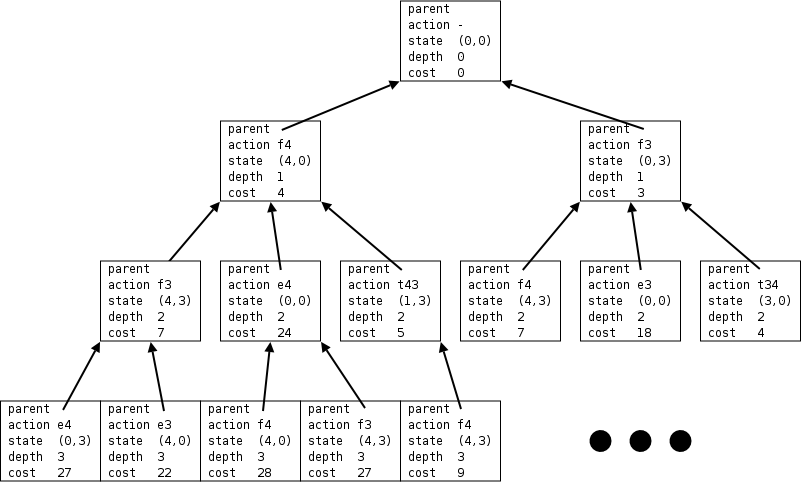

Search algorithms generate a search tree on the fly. Search trees contain nodes. Each node in the tree contains information about a particular path in the problem space. Nodes are not the same thing as states.

class Node {

State state;

Action action;

Node parent;

int depth;

int cost;

Node(State state, Action action, Node parent, int stepCost) {

this.state = state;

this.action = action;

this.parent = parent;

depth = parent == null ? 0 : parent.depth + 1;

cost = parent == null ? 0 : parent.cost + stepCost;

}

}

Example: The water jug problem with 4 and 3 gallon jugs. Cost is 1 point per gallon used when filling, 1 point to make a transfer, 5 points per gallon emptied (since it makes a mess). The search tree might start off like this:

- Generate

- Compute a new search tree node from its parent

- Expand

- Generate, from a node, all of its children

Search trees have

- Branching Factor ($b$)

- The average number of children of a node

- Depth ($d$)

- Height of the shortest solution subtree

- Max Depth ($m$)

- Maximum depth of the search tree (can be infinite)

The complexity of most search algorithms can be written as a function of one or more of $b$, $d$ and $m$.

Complexity

- The time complexity has to do with to the number of nodes generated.

- The space complexity has to do with the number of nodes that have to be stored (at a time)

In general though there may be more states than there are fundamental particles in the universe. But we need to find a solution. Usually is helpful to

- Find ways to identify large subsets of states that could never possibly be goal states so you don’t have to ever visit them.

- Don’t revisit states