AWS Basics

Unit Goals

What We Will Cover

This will be a technical introduction. We will not go through the history of AWS, the company, how much money it makes, who its clients are, and so on. That information is publicly available.

Let’s see how to use it.

Getting Started

There are many resources on the web for getting started and you should use those. We’ll assume you are all signed up.

Before building something, get to know what kinds of services AWS provides. As of April 2019, AWS offers well over 100 services. Here are just a few of the popular ones:

| Category | Service | Description |
|---|---|---|
| Compute | EC2 | (Elastic Compute Cloud) Create and use (virtual) machines |
| | Lambda | Functions (Serverless) |
| | ECS | (Elastic Container Service) Manage Docker containers |
| | Fargate | Containers without worrying about servers |
| | Batch | Batch jobs |
| | Lightsail | Virtual Private Servers, good for getting started |
| | Elastic Beanstalk | For running webapps |
| Storage | S3 | (Simple Storage Service) Store objects in buckets |
| | EBS | (Elastic Block Store) Block storage for EC2 instances |
| | EFS | (Elastic File System) Fully managed file system for EC2 |
| | Glacier | Low-cost archival storage |
| | Backup | Configure backup strategies |
| Database | RDS | The standard relational database service |
| | Aurora | High-performance, managed relational database |
| | DynamoDB | Managed NoSQL database |
| | DocumentDB | Managed Mongo-compatible document database |
| | ElastiCache | In-memory (key-value) caching system |
| | Redshift | Data warehouse |
| | Neptune | Managed graph database |
| | Timestream | Managed time series database |
| Security, Identity, and Compliance | IAM | (Identity and Access Management) Identity management and roles for the AWS account |
| | Cognito | Identity management for apps |
| | GuardDuty | Threat detection service |
| | Inspector | Application security analyzer |
| | Certificate Manager | SSL/TLS certificates |
| | Firewall Manager | Manage firewall rules |
| | Secrets Manager | Manage (including rotation of) secrets |
| | Shield | DDoS protection |
| | WAF | Filters malicious traffic |
| Networking and Content Delivery | VPC | Virtual Private Cloud |
| | CloudFront | Global CDN |
| | Route 53 | DNS |
| | API Gateway | For API deployment |
| | ELB | Elastic Load Balancer |
| Application Integration | SQS | Simple Queue Service |
| | SNS | Simple Notification Service |
| Machine Learning | SageMaker | Build, train, and deploy large ML models |
| | Lex | Voice and chatbots |
| | Polly | Text to speech |
| | Transcribe | Speech recognition |
| | Rekognition | Image and text recognition and analysis |
| | Comprehend | Discover insights and relationships in text |
| | Translate | Language translation |
| | Textract | Extract text and data from documents |
| Analytics | Athena | Query S3 |
| | CloudSearch | Managed search service |
| | Elasticsearch | Manage Elasticsearch clusters |
| | Kinesis | Real-time data streams |
| | EMR | Elastic MapReduce |
| | QuickSight | Business analytics service |
| Management and Governance | CloudFormation | Automate infrastructure creation |
| | CloudWatch | Monitoring and logging |
| | CloudTrail | Track user activity and API usage |

Other categories: Developer Tools, Business Applications, Game Tech, Internet of Things, Media Services, Robotics, Blockchain, Customer Management, Mobile, End User Computing, and Satellite.

One other good thing to know right up front. There are three ways to interact with the resources in your AWS account:

- Via the AWS Management Console at console.aws.amazon.com, which provides GUI access to most services.

- Via the command line, using the AWS CLI.

- Programmatically, using libraries in a popular programming language.

We’ll introduce these as needed. But now let’s get to work.

Some Warmup Tasks

We’re not going to cover services and best practices and programming examples one-by-one; instead, we’re just going to do things and learn what we need along the way. And we’re not going to get very sophisticated; a useful tour of AWS would take hours and hours and hours.

Some of these tasks may cost real money. You will need to watch your costs. If you spin up resources, terminate them as soon as you finish with them to keep costs down.

Also, for simplicity, these notes will not say anything about best practices to control costs.

You are on your own here. You can read up on the AWS “Free Tier” and other Amazon docs for running as cheap as possible.

Are you in the LMU class?

Our institution is part of AWS Educate, so you can sign up under the institutional account and get $100 free. The usage is capped, so you still want to be careful, but you have more than enough credits to run through the tasks on this page.

These notes do not comprise an official tutorial. You won’t find any hand-holding here. Just a bunch of bullet points used to guide in-class demonstrations and classwork, so it will be really hard to follow on your own. And this lecture isn’t going on YouTube, so come to class if you’re interested in this stuff.

0. Setup

You shouldn’t really do anything unless you have a security mindset and understand a few basic things. So let’s get all set up.

- Your AWS account comes with a root user that has a user name and password. You should not use it, other than the very first time you log in. If this is your first time, or you haven’t made users, log in as the root user.

- Go to the IAM service.

- Create an actual user. For the purposes of our in-class demos, the AdministratorAccess policy is okay. Probably best to create a group first and then add the user to it, but you can attach policies to a user directly.

- Make your ~/.aws/credentials and ~/.aws/config files. For simplicity, let’s use the default profile. Config file:

    [profile default]
    output = json
    region = us-east-1

  Credentials file (paste the keys you got when you created the user):

    [default]
    aws_access_key_id = xxxxxxxxxxxxxxxxxxxx
    aws_secret_access_key = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

- Log out of the console so you are not the root user anymore.

- Log in using the user you just created. (You will need the sign-in link, which you should have noted when you created the user.)

- Install the awscli.

- Test your awscli installation.

$ aws help
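Once the credentials file is in place, one way to confirm that the SDK picks up the new IAM user rather than the root user is to ask STS who you are. What follows is a minimal sketch, assuming boto3 is installed and the default profile above is configured; the helper names `is_root_arn`, `whoami`, and `check_identity` are made up for this example.

```python
def is_root_arn(arn):
    """Return True if an ARN identifies the account root user.

    Root ARNs look like arn:aws:iam::123456789012:root, while IAM users
    look like arn:aws:iam::123456789012:user/alice.
    """
    return arn.split(":")[-1] == "root"


def whoami():
    """Ask STS which identity the default credentials belong to (needs network access)."""
    import boto3  # imported lazily so is_root_arn can be used without boto3 installed

    identity = boto3.client("sts").get_caller_identity()
    return identity["Account"], identity["Arn"]


def check_identity():
    """Print the current identity and warn if it is the root user."""
    account, arn = whoami()
    print(f"Account {account}, signed in as {arn}")
    if is_root_arn(arn):
        print("Warning: these are root credentials; create an IAM user instead.")
```

Calling `check_identity()` from a Python prompt should print the ARN of the user you created. The command line equivalent is `aws sts get-caller-identity`.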

1. Store some data in S3 and retrieve it

S3 stands for Simple Storage Service. It organizes your data into buckets. Each bucket has a globally unique name. Buckets store objects, which can basically be anything. Object names, called keys, can have slashes in them, making it look like your bucket is organized into folders.
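To see how slashes in flat key names produce the appearance of folders, here is a small local sketch. It does not call S3; it only imitates, roughly, the grouping that S3 list operations perform when given a prefix and a delimiter. The function name `list_folder` and the sample keys are hypothetical.

```python
def list_folder(keys, prefix="", delimiter="/"):
    """Split flat object keys into (folders, files) directly under prefix."""
    folders, files = set(), []
    for key in keys:
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # Another delimiter remains, so the key sits inside a "subfolder"
            folders.add(prefix + head + delimiter)
        else:
            files.append(key)
    return sorted(folders), sorted(files)


keys = ["ts-logo.png", "logos/go-logo.png", "logos/old/c-logo.png", "docs/readme.txt"]
print(list_folder(keys))                   # (['docs/', 'logos/'], ['ts-logo.png'])
print(list_folder(keys, prefix="logos/"))  # (['logos/old/'], ['logos/go-logo.png'])
```

The bucket itself stays flat: "logos/old/" exists only because some key happens to start with that string.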

- In the AWS console, go to the S3 service.

- Create a bucket. I called mine programming-languages-logos; you will need to pick another name because bucket names must be globally unique.

- Set its properties and access restrictions.

- Upload an image in the console. (I uploaded ts-logo.png.)

- Download the image in the console.

- On the command line, download your image with

$ aws s3api get-object --key=ts-logo.png --bucket=programming-languages-logos ts

This displays some JSON in the console describing the downloaded object, and writes the object itself to a file called ts.

- Upload a new image on the command line

$ aws s3api put-object --key=go-logo.png --bucket=programming-languages-logos --body=go-logo.png

- In the AWS console, check that the new image is in the bucket.

- On the command line, list the contents of that bucket.

$ aws s3api list-objects --bucket=programming-languages-logos

- You can even use the command line to list all the buckets in your account

$ aws s3api list-buckets

- Write a Python program to list the contents of a bucket.

    import sys
    import boto3

    s3 = boto3.client('s3', 'us-east-1')
    # 'Contents' is absent when the bucket is empty, so fall back to an empty list
    for obj in s3.list_objects(Bucket=sys.argv[1]).get('Contents', []):
        print(obj['Key'])

    $ python listbuckets.py programming-languages-logos
    go-logo.png
    ts-logo.png

- Write a Python program to generate a time-bombed URL to an image.

    import sys
    import boto3
    from botocore.client import Config

    # Sign with Signature Version 4
    s3 = boto3.client('s3', 'us-east-1', config=Config(signature_version='s3v4'))
    params = {'Bucket': sys.argv[1], 'Key': sys.argv[2]}
    # The URL stops working ExpiresIn seconds after it is generated
    print(s3.generate_presigned_url(
        ClientMethod='get_object', Params=params, ExpiresIn=60))

    $ python gets3url.py programming-languages-logos ts-logo.png
    https://programming-languages-logos.s3.amazonaws.com/ts-logo.png?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIA2ALKGBNDOQUSGWV5%2F20190502%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20190502T034922Z&X-Amz-Expires=60&X-Amz-SignedHeaders=host&X-Amz-Signature=940f5b0498ac06c2e7e0bc2a9bdbf742b5643f15281b7ff8e8bf6b12232746b1

- Try that link out in the browser, quickly. You should see the image.

- Wait 60 seconds and try the link again. The browser will show an Error document.
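The expiry is visible in the presigned URL itself: X-Amz-Date records when the URL was signed and X-Amz-Expires gives its lifetime in seconds. The sketch below reads those two query parameters with the standard library and computes when the link dies. The function name `presigned_url_expiry` is made up, and the sample URL is a shortened, hypothetical one that reuses the date and lifetime from the run above with a placeholder signature.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit


def presigned_url_expiry(url):
    """Return the UTC datetime at which a SigV4 presigned URL stops working."""
    query = parse_qs(urlsplit(url).query)
    signed_at = datetime.strptime(query["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ")
    signed_at = signed_at.replace(tzinfo=timezone.utc)
    return signed_at + timedelta(seconds=int(query["X-Amz-Expires"][0]))


# Hypothetical, shortened URL; a real one carries more parameters
sample_url = (
    "https://programming-languages-logos.s3.amazonaws.com/ts-logo.png"
    "?X-Amz-Date=20190502T034922Z&X-Amz-Expires=60&X-Amz-Signature=placeholder"
)
print(presigned_url_expiry(sample_url).isoformat())  # 2019-05-02T03:50:22+00:00
```

Editing X-Amz-Expires by hand does not extend the link, because the parameters are covered by the signature.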

2. Spin up an EC2 Box and do some stuff

- In the AWS Console, go to the EC2 dashboard.

- Launch Instance (choose something “Free-tier eligible” — a t2.micro running Ubuntu should suffice).

- Click Review and Launch. On the Review and Launch Page, click on Edit Security Groups.

- Choose a name for your security group (SG) and add an inbound rule of type SSH with source My IP. You can use defaults for everything else (you will get a default VPC, etc.).

- Click Launch, which will prompt you for a key pair. It’s best practice for you to create your own key pair and use it, but you can, if you trust AWS, have it generate a key pair for you. If you do, make sure to chmod the private key file to 400 and put it in a safe place on your laptop.

- Launch

- View instances. Browse all the information on the console. How much do you recognize after a whole semester in the Networks class (and from your earlier Operating Systems class)?

- From your laptop command line, ssh into your box. (I don’t know how to do this on Windows; maybe you need PuTTY, for all I know.) The login user depends on the image: ec2-user on Amazon Linux, ubuntu on Ubuntu. For me, it was:

$ ssh -i ~/.ssh/demo.pem ec2-user@ec2-54-197-13-218.compute-1.amazonaws.com

- You should be in, so create some folders and files, and run all your favorite command line Unix programs. You probably have Python installed. You can download and install Node and Ruby too. Or even an assembler!

- Have a friend try to ssh into your box. That should not work. If it does, that’s because you did not set up your security group correctly.

- Exit.

- If you like, from the EC2 console, terminate the instance. (If you don't, you will incur charges at some point.)
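Forgotten instances are the usual source of surprise charges, so it can help to script the cleanup. The following is a minimal sketch, assuming boto3 is installed and your credentials allow describing and terminating instances; the function names are made up for this example. It looks only at the first page of results, which is plenty for a classroom account.

```python
def instance_ids(response):
    """Pull instance IDs out of an EC2 describe_instances response dict."""
    return [
        instance["InstanceId"]
        for reservation in response.get("Reservations", [])
        for instance in reservation.get("Instances", [])
    ]


def terminate_all_running(region="us-east-1"):
    """Terminate every running instance in the region (destructive; needs network access)."""
    import boto3  # imported lazily so instance_ids can be used without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.describe_instances(
        Filters=[{"Name": "instance-state-name", "Values": ["running"]}]
    )
    ids = instance_ids(response)
    if ids:
        ec2.terminate_instances(InstanceIds=ids)
    return ids
```

Calling `terminate_all_running()` returns the IDs it terminated. It really does terminate everything that is running in that region, so use it only in an account that holds nothing but demo boxes.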

Aside: AWS has a lot of instance types. An example is t3.large, where t is the type, 3 is the generation, and large is the size. Sizes are nano, micro, small, medium, large, xlarge, 2xlarge, and a lot of different multiples of xlarge. There are a few types, which are fun to try to remember:

| Type | Recent generations | Mnemonic | Description |
|---|---|---|---|
| General Purpose | | | |
| t | 2, 3, 3a | Tiny, Turbo | Burstable CPU (varying workloads). Good for webapps, microservices, test environments. Can get these from nano up to 2xlarge. |
| m | 4, 5, 5a, 5d, 5ad | Main | Good for general purpose enterprise apps, backend, servers, mid-size databases. Best for steadier workloads. Only comes in large and above. |
| a | 1 | ARM | Features custom-built AWS Graviton Processor. From medium to 4xlarge. Good for general purpose computing, containers, and development environments. |
| Compute Optimized | | | |
| c | 4, 5, 5a, 5d, 5n | Compute | For compute-intensive tasks like batch processing, analytics, science and engineering, gaming, video encoding. The n suffix means better for networking; these instances are good for enhanced networking, for more massively scalable games, etc. |
| Accelerated Computing | | | |
| p | 2, 3, 3dn | Parallel | General purpose massively parallel computing with GPUs with thousands of cores. Good for machine learning, computational fluid dynamics, finance, seismic analysis, molecular modeling, genomics, speech recognition, drug discovery. From xlarge to 24xlarge and beyond. |
| g | 2, 3, 3s | Graphics | Optimized for graphics-intensive applications: 3D visualizations, graphics remote workstations, 3D rendering, application streaming, video encoding. |
| f | 1 | FPGA | Hardware acceleration through field programmable gate arrays (FPGAs). Good for genomics, financial analytics, real-time video processing, big data, security. |
| Memory Optimized | | | |
| r | 4, 5, 5a, 5d | RAM | Optimized for memory-intensive applications, such as in-memory databases, data mining and analysis, in-memory caches, real-time processing of unstructured big data, Hadoop/Spark clusters, and big data analytics. |
| x | 1, 1e | ? | Really large in-memory databases; x1e.32xlarge has almost 4TB of memory. |
| z | 1d | ? | AWS made this instance to have ultra-fast 4.0GHz cores so their customers could reduce their costs of software such as EDA and databases that have per-core licensing costs. |
| Storage Optimized | | | |
| i | 2, 3, 3en | I/O Performance | Optimized for high IOPS with SSD storage. Good for NoSQL databases, scale-out transactional databases, data warehousing, Elasticsearch, analytics workloads. The en versions go up to 100Gbps networking. |
| h | 1 | HDD | Up to 16TB local HDD storage. Used for MapReduce, distributed file systems, network file systems, log or data processing applications, and big data workload clusters. |
| d | 2 | Dense | Up to 48TB local HDD storage. Used in Massively Parallel Processing (MPP) data warehousing, distributed computing, distributed file systems, network file systems, log processing. |
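The naming scheme from the aside (type letter, generation, optional suffix letters, then a dot and the size) can be sketched as a small parser. This is an illustration only: the pattern is an assumption that covers the names appearing in the table, not every instance type AWS offers, and `InstanceType` and `parse_instance_type` are made-up names.

```python
import re
from typing import NamedTuple


class InstanceType(NamedTuple):
    family: str      # type letter, e.g. "t"
    generation: int  # e.g. 3
    suffix: str      # extra capability letters, e.g. "n" or "ad"; may be empty
    size: str        # e.g. "large" or "32xlarge"


# Assumed shape: letters, digits, optional letters, a dot, then the size
_PATTERN = re.compile(r"^([a-z]+)(\d+)([a-z]*)\.([a-z0-9]+)$")


def parse_instance_type(name):
    """Split a name such as 'c5n.xlarge' into its parts."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a recognized instance type name: {name!r}")
    family, generation, suffix, size = match.groups()
    return InstanceType(family, int(generation), suffix, size)


for name in ["t3.large", "c5n.xlarge", "x1e.32xlarge"]:
    print(name, "->", parse_instance_type(name))
```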

3. Deploy a static website with S3 and CloudFront

Let’s make a trivial hello world type site.

- The simplest way is to make a bucket that is really public. AWS will complain that you made the bucket public, but for right now let’s see that it works.

- Create the bucket with all those “block” and “remove” settings unchecked. AWS will warn you, but for now, go through with it.

- Open the Static Website Hosting box in properties, check it, and say you want index.html as the main page.

- Add the bucket policy from these instructions.

- Upload your little website.

- The URL that it lives at is in the Static Website Hosting box.
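The console steps above can also be scripted. The linked instructions are not reproduced in these notes, so the policy below is an assumption: it is the usual public-read policy for a static site, allowing anyone to perform s3:GetObject on every object in the bucket. The sketch assumes boto3 is installed, the bucket already exists, and its public-access blocks are switched off as described; the function names are made up.

```python
import json


def public_read_policy(bucket):
    """Build a bucket policy (as a JSON string) that lets anyone read every object."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
        }],
    })


def configure_website(bucket, region="us-east-1"):
    """Turn on static website hosting and attach the public-read policy (needs network access)."""
    import boto3  # imported lazily so public_read_policy can be used without boto3 installed

    s3 = boto3.client("s3", region_name=region)
    s3.put_bucket_website(
        Bucket=bucket,
        WebsiteConfiguration={"IndexDocument": {"Suffix": "index.html"}},
    )
    s3.put_bucket_policy(Bucket=bucket, Policy=public_read_policy(bucket))
```

After `configure_website('your-bucket-name')`, upload index.html as before and look up the site URL in the Static Website Hosting box.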

4. Write a serverless function with Lambda

5. Check your logs on CloudWatch

6. Create and use an RDS database

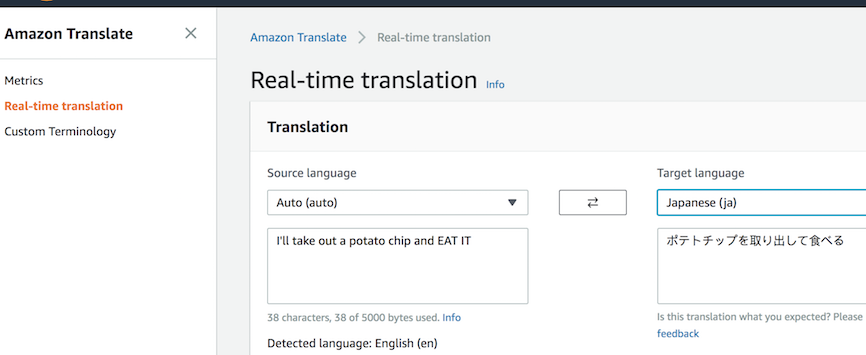

7. Use AWS Translate

You can use the console:

Or the command line:

    $ aws translate translate-text --source-language-code=en --target-language-code=es --text='Hello, world!'
    {
        "TranslatedText": "¡Hola, mundo!",
        "SourceLanguageCode": "en",
        "TargetLanguageCode": "es"
    }

Or you can do it from Python also:

    import sys
    import boto3

    client = boto3.client('translate')
    response = client.translate_text(
        SourceLanguageCode=sys.argv[1],
        TargetLanguageCode=sys.argv[2],
        Text=sys.argv[3])
    print(response.get('TranslatedText', response))

    $ python translate.py en de 'Where is the train station?'
    Wo ist der Bahnhof?
    $ python translate.py en ja "Those who break the rules are scum, that's true, but those who abandon their friends are worse than scum."
    ルールを破る人はスカムですが、それは本当ですが、友人を放棄する人はスカムよりも悪いです。
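The script above translates into one language per run. A small extension is sketched here: it takes an already-created client (for example `boto3.client('translate')`) and loops over several target language codes. The function name `translate_many` is made up; passing the client in as a parameter is a design choice that lets you try the function with a stand-in object before spending money on real calls.

```python
def translate_many(client, text, source, targets):
    """Translate text from the source language into each target language code."""
    results = {}
    for target in targets:
        response = client.translate_text(
            Text=text,
            SourceLanguageCode=source,
            TargetLanguageCode=target,
        )
        results[target] = response["TranslatedText"]
    return results
```

With a real client, `translate_many(boto3.client('translate'), 'Hello, world!', 'en', ['es', 'de'])` returns a dictionary keyed by language code, at the cost of one API call per target language.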

Building an Infrastructure

TODO - sample architecture, CloudFormation, All the security concerns...

Summary

We’ve covered:

- How one can get started with AWS

- Deploying a trivial webapp

- The categories of services

- Using a few of the popular services

- Infrastructure concerns