Code Improvement

Motivation and Goals

We want a compiler to produce good code, at least we usually do. We may wish to “optimize” one or more of:

- Program size

- Program speed

- Resource usage (page swaps, caches, file handles, threads, etc.)

General optimization is undecidable, and many specific optimization tasks happen to take provably exponential time or are NP-Hard. So we normally speak of code improvement.

The general rule is to do those things that give the most improvement for the least amount of work, so for instance, you might see compilers that don’t bother doing any improvement outside loops. Still, there are different degrees of optimization aggressiveness:

- No improvements at all

- Only few basic simplifications on AST nodes or tuples (peephole optimization)

- Attempt near-optimal code within basic blocks (by removing redundancies like common subexpressions, doing smart instruction scheduling and register allocation, etc.)

- Intraprocedural optimization (improving across basic blocks, but still within a function, e.g., loop optimization using data flow analysis)

- Interprocedural optimization

Ideally, optimizations should satisfy two criteria:

SAFETY

The optimized code does the “same thing” as before

PROFITABILITY

The optimized code is actually smaller or faster

but as we’ll see, unsafe and unprofitable optimizations are sometimes done in practice.

Where Can Optimization Be Done?

There is no single optimization phase in a compiler. Lots of things happen to improve code:

- The programmer needs to find a better algorithm. Not many (if any) compilers can turn bubblesort into quicksort.

- The intermediate code generator can be smart about how it generates intermediate code.

- The compiler can make a pass or two or three over the intermediate code to improve it.

- The code generator can be smart, with clever instruction selection, by employing register tracking, etc.

- The compiler can make a pass or two or three over the target code to improve it.

- The run time system can adapt to the way a program is running, possibly recompiling and relinking on the fly.

- The programmer profiles the running code and finds out where the memory leaks and bottlenecks and excessive resource consumption are occurring, and rewrites the code accordingly.

Optimization Strategies

In general, you’ll try to:

- Use cheaper, more efficient operations

- Reuse results rather than recompute them

- Maximize the use of registers, caches, and memory

- Reduce the amount of code (e.g., remove dead and useless code)

- Reduce conditional jumps (they make branch prediction difficult)

- Optimize the use of pipelines and cores

But note the need for tradeoffs! You can get rid of some jumps by unrolling loops, but this makes more code.

A Catalog of Optimization Techniques

Machine Independent Optimizations

- Constant Folding

- Algebraic Simplification (e.g. operand reordering)

- Strength Reduction

- Unreachable Code Elimination

- Dead Code Elimination

- Copy Propagation

- Common Subexpression Elimination

- Loop Unrolling

- Loop Fission

- Loop Fusion

- Loop Inversion

- Loop Interchange

- Loop Invariant Factoring

- Jump Threading

- Induction Variable Simplification

- Tail Recursion Elimination

- Inline Expansion

Mostly Machine Independent Optimizations

- Efficient address computation

- Simplified stack frames

- Static allocation of frames

- Inlining

Machine Dependent Optimizations

- Careful instruction selection, using an understanding of caches, pipelines, branch prediction algorithms, scheduling rules, alignment requirements

- Smart register allocation

- Smart use of address modes

- Balancing across multiple CPUs

Links

- Compiler Optimization at Wikipedia

- Wikipedia’s Compiler Optimizations Category Page

- Agner Fog’s Software Optimization Resources

- A pretty good reference

Examples

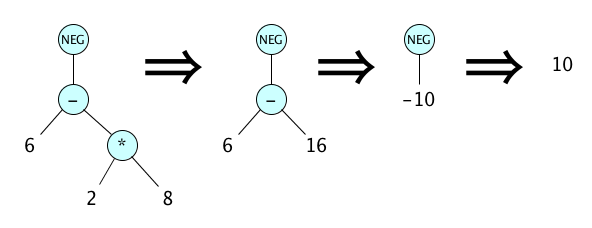

Constant Folding

Why wait until the target program is run to do simple computations? Let the compiler do it. Example:

Remember we can always write trees as strings, so we’ll do that from now on:

| (NEG (- 6 (* 2 8))) | (NEG (- 6 16)) | (NEG -10) | 10 |

Strength Reduction

You can replace expensive operations with cheaper ones:

| (+ x 0) | x |

| (- x 0) | x |

| (- x x) | 0 |

| (- 0 x) | (NEG x) |

| (+ x (NEG y)) | (- x y) |

| (- x (NEG y)) | (+ x y) |

| (* x 0) | 0 |

| (* x 1) | x |

| (/ x 1) | x |

| (/ x x) | 1 |

| (% x 1) | 0 |

| (% x x) | 0 |

| (/ 0 x) | 0 |

| (% 0 x) | 0 |

| (< x x) | false -- also for > and NOTEQ |

| (EQ x x) | true -- also for <= and >= |

| (AND x false) | false |

| (OR x true) | true |

| (NOT <= x y) | (> x y) |

| (>= (ABS x) 0) | true |

| (* x 4) | (SHL x 2) |

| (/ x 4) | (SHR x 2) -- arithmetic right shift |

You can even “invent” some powerful operations:

| (+ (* x 90) y) | (MULADD x 90 y) |

| (AND (<= x 3) (>= x -10)) | (INRANGE x -10 3) |

By “inventing” powerful operations, we mean creating new AST nodes representing operations that may not appear in your source language. That is, a language may not have INC, MULADD, INRANGE or a host of other operators, but during optimization there is nothing wrong with adding these new nodes! In fact, some can be created for the sole purpose of allowing better code to be generated.

Operand Reordering

Use commutativity, associativity, and distributivity to either reduce the number of operations, or do things like ”put the more complex code on the left:”

| (+ x (- y z)) | (+ (- y z) x) -- commutativity |

| (* x (* y z)) | (* (* y z) x) -- associativity |

| (- (* x 5) (* y 5)) | (* (- x y) 5) -- distributivity |

Complex on the left simplifies register allocation on some machines.

But watch out: associativity and distributivity changes may not preserve overflow semantics.

$ swift

1> let x: Int8 = 70

x: Int8 = 70

2> let y: Int8 = 80

y: Int8 = 80

3> let z: Int8 = 30

z: Int8 = 30

5> (x + (y - z))

$R0: Int8 = 120

4> (x + y) - z

Execution interrupted. Enter code to recover and continue.

Enter LLDB commands to investigate (type :help for assistance.)

Assignment Simplification

As long as assignment doesn’t have hidden side-effects, you can do things like this:

| (ASSIGN x x) | (NOP) |

| (ASSIGN x (+ x 1)) | (INC x) |

| (ASSIGN x (- x 1)) | (DEC x) |

| (ASSIGN x (+ x y)) | (+= x y) |

Beware of languages that allow for side effects or triggers to take place on assignment. For example Ada allows assignment triggers, and C++ allows you to overload the assignment operator. The optimzations above prevent those effects and triggers, hence those optimizations are strictly speaking unsafe.

Unreachable Code Elimination

If you can’t ever get to some code, don’t generate it. With trees:

| (IF true T1 T2) | T1 |

| (IF false T1 T2) | T2 |

| (BLOCK T1 T2 RETURN T3 T4) | (BLOCK T1 T2 RETURN) |

| (WHILE false T) | (NOP) |

| (FOR i emptyrange T) | (NOP) |

Other general strategies:

- Remove all tuples after an unconditional control transfer (JUMP, RETURN, THROW), up to, but not including, the next LABEL.

- Remove all basic blocks with no predecessors

- Remove all private functions or methods that are never called

Dead Code Elimination

If a local variable or by-value parameter is assigned to but never subsequently used, you can delete the assignment. As usual, do this only if no implicit operations have side effects, and watch out for aliasing.

void f() {

int p = 5;

int* q = &p;

p = 3; // LOOKS LIKE DEAD CODE BUT IT IS NOT!!

cout << *q;

}

Copy Propagation

After an assignment x := y (i.e., (COPY y x)), replace all uses of x with y until such point that the values of x or y could be different. This might turn the copy into dead code which you can eliminate. Given the source code:

w := 4; a = w - 7 + (z * w)

then optimizing the AST proceeds as follows:

| (BLOCK (ASSIGN w 4) (ASSIGN a (+ (- w 7) (* z w)) ) | (ASSIGN a (+ (- 4 7) (* z 4))) | (ASSIGN a (+ (- 3) (* z 4)) | (ASSIGN a (- (* z 4) 3)) |

And with Tuples:

| (COPY,4,w) (MUL,z,w,t1) (SUB,w,7,t2) (ADD,t2,t1,a) | (MUL,z,4,t1) (SUB,4,7,t2) (ADD,t2,t1,a) | (MUL,z,4,t1) (COPY,-3,t2) (ADD,t2,t1,a) | (MUL,z,4,t1) (ADD,-3,t1,a) | (MUL,z,4,t1) (SUB,t,3,a) |

Common Subexpression Elimination

If an expression appears multiple times, AND ITS VALUE CANNOT CHANGE IN BETWEEN THESE EVALUATIONS, then you can just evaluate it once:

| x * 3 + x * 3 + 10 | _t = x * 3; _t + _t + 10; | |

| a[i][j] = a[i][j] + 1 | _t = &a[i][j]; (*_t)++; | |

| x = a[i]; a[i] = a[j]; a[j] = x; | _t0 = &a[i]; x = *_t0; _t1 = &a[j]; *_t0 = *_t1; *_t1 = x; | --After CP and DCE _t0 = &a[i]; _t1 = &a[j]; *_t0 = *_t1; *_t1 = *_t0; |

Expressions that look common may not be, due to pointers, aliasing, references, arrays, etc.x = a[i] - 85; a[j] = 21; // a[i] is NOT common print(a[i]); // ... because maybe i == jIf your language has references, be very carefulx = j * 8 - y; q = 10; // Make sure you never had done c = j * 8 - y // ... something like int& q = j

Conditional Jump Tuple Compression

A naive translation into tuples for:

if (x < 3) { ... }

can be optimized like so:

| (LT, x, 3, t0) (JZ, t0, L3) | (JGE, x, 3, L3) |

This can be very useful since the naive translation of the first tuple sequence to x86 assembly would be:

cmp x, 3 setl dl movzx eax, dl test eax, eax jz L3

and for the second:

cmp x, 3 jg3 L3

Loop Unrolling

Loops can have a performance issue, since time is required to execute those jumps, and conditional jumps can cause slowdowns because of the potential for branch misprediction.

| for i in 1..4 { T } | i = 1; T; i = 2; T; i = 3; T; i = 4; T; |

| (FOR i (RANGE 1 4) T) | (BLOCK (ASSIGN I 1) T (ASSIGN I 2) T (ASSIGN I 3) T (ASSIGN I 4) T ) |

Loop unrolling is commonly followed by copy propagation!

| for i in 1...4 { print(i * 2) } | i = 1; print(i * 2); i = 2; print(i * 2); i = 3; print(i * 2); i = 4; print(i * 2); | print(1 * 2); print(2 * 2); print(3 * 2); print(4 * 2); | print(2); print(4); print(6); print(8); |

Look unrolling might conflict with cache issues, since the code is getting bigger.

Note: you can partially unroll, that works too.

Loop Fusion

Suppose you know that you had an array of structs:

struct {

int a;

int b;

} s[5000];

then two loops accessing the a’s and b’s independently could be fused into one to improve locality (and avoid destroying performance due to cache unloading and refilling:

| for i in 0..<n { ... s.a[i] ... } for i in 0..<n { ... s.b[i] ... } | for i in 0..<n { ... s.a[i] ... ... s.b[i] ... } |

Loop Fission

Suppose you know that you had two parallel arrays in a struct:

struct {

int a[5000];

int b[5000];

} s;

then one loop that iterated both array could be split into two loops accessing the a’s and b’s independently to improve locality (and avoid destroying performance due to cache unloading and refilling:

| for i in 0..<n { ... s.a[i] ... ... s.b[i] ... } | for i in 0..<n { ... s.a[i] ... } for i in 0..<n { ... s.b[i] ... } |

Loop Inversion

This interesting technique replaces a while loop with a do-while loop in an if statement, looking like this at the source code view (BUT DON’T REALLY DO THIS IN YOUR SOURCE CODE, LET THE COMPILER DO IT):

| while (condition) { body } | if (condition) { do { body } while (condition); } |

This tends to generate better machine code, because there are fewer jumps. Here it is in tuples:

| top: condition (JZ, bottom) body (JUMP top) bottom: ... | condition (JZ, bottom) top: body condition (JNZ, top) bottom: ... |

The last iteration of the loop has two jumps not taken in the optimized version compared to the unoptimized version. Pretty useful if this is a nested loop!

| For a loop executed n times |

Unoptimized Version | Optimized Version | |||

|---|---|---|---|---|---|

| Conditional Jumps | Unconditional Jumps Taken |

Conditional Jumps | |||

| Taken | Fallen Through |

Taken | Fallen Through |

||

| 0 | 1 | 0 | 0 | 1 | 0 |

| 1 | 1 | 1 | 1 | 0 | 2 |

| 2 | 1 | 2 | 2 | 1 | 2 |

| 3 | 1 | 3 | 3 | 2 | 2 |

| 4 | 1 | 4 | 4 | 3 | 2 |

| 5 | 1 | 5 | 5 | 4 | 2 |

| 100 | 1 | 100 | 100 | 99 | 2 |

Remember these are *compiler* optimizations

Don’t use the examples on this page as an example of how you, as a programmer, should be writing source code. The page discusses optimizations that a compiler can do for you. You (the programmer) are supposed to write clear, readable code. Let the compiler optimize. It’s generally much better at that you are.

Loop Interchange

If you know that your matrices are laid out in row-major (as in C) or column-major (as in Fortran) order, and you detect that a matrix is being processed with nested loops in a manner that is opposite from the layout, and you can tell that the body of the loop is not modifying the matrix in any way dependent on the traversal order, you can switch the traversal order. Here is an example of switching from row order to column order:

| for i in 0..<m { for j in 0..<n { ... a[i, j] ... } } | for j in 0..<n { for i in 0..<m { ... a[i, j] ... } } |

Loop Invariant Factoring

A loop invariant is an expression that is always the same for every execution of the loop. You might consider moving the evaluation of this expression just outside (just before) the loop, so that it evaluated only once. Example:

| for i in 0..<n { for j in 0..<n { for k in 0..<n { a[i][j][k] = i * j * k } } } | for i in 0..<n { _t0 = a[i] for j in 0..<n { _t1 = _t0[j] _t2 = i * j for k in 0..<n { _t1[k] = _t2 * k } } } |

If t = 100, for example then:

- Without factoring: 3,000,000 array accesses and 2,000,000 multiplications

- With factoring: 1,010,100 array accesses and 1,010,000 multiplications

Factoring may be unsafe

You may factor an expression that throws an exception (or crashes), and if it does the exception would be thrown at the wrong place, perhaps before a side-effect which could completely change the program meaning.

A good compiler will only factor expressions it knows cannot throw.

Factoring may be unprofitable as well as unsafe

Factoring an invariant from a loop that is executed zero times causes unnecessary and potentially harmful execution of code that should not ever have been run.

Jump Threading

Sometimes a jump will land on a jump (or a throw or a return). Examples (for tuples):

| (JGE, x, 3, L5) some code L5: (JUMP, L7) | (JGE, x, 3, L7) some code L5: (JUMP, L7) |

| (JGE, x, 3, L5) some code L5: (JGE, x, 2, L7) | (JGE, x, 3, L7) some code L5: (JGE, x, 2, L7) |

Here’s a nice Jump Threading article.

The basic idea of jump threading is to avoid conditional jumps that you know will always be taken or never be taken, and make sure they are never executed. The same theme appears in transformations such as these (at the AST level):

| (BLOCK (IF c T1) (IF c T2)) | (IF c (BLOCK T1 T2)) |

| (BLOCK (IF c T1) (IF (NOT c) T2)) | (IF c T1 T2) |

Again, only perform these if you can prove execution of T1 will not affect c.

Induction Variable Simplification

An induction variable is any variable whose value is incremented by a constant each pass through the loop.

An induction expression has the form i*c1+c2 for induction variable i and invariants c1 and c2. You can get rid of the multiplication that it is the loop, replacing it with a much cheaper addition! Here’s an example:

| for i in 1...n { a[i] = 80 * i + q } | _t = 80 + q for i in 1...n { a[i] = _t _t = _t + 80 } |

In general, replace induction expression i*c1+c2 by initializing a new variable to i0*c1+c2 and incrementing by s*c1 where s is the step size

Tail Recursion Elimination

A function that returns a call to itself can be transformed into a loop. The classic gcd example is:

| function gcd(x, y) { if (y === 0) { return x; } return gcd(y, x % y); } | function gcd(x, y) { while (true) { if (y === 0) { return x; } [x, y] = [y, x % y]; continue; } } |

The continue is emitted just in case there is more code after the return, but of course if the optimizer can tell there is no code there, then it is unnecessary. Be careful that the return is not inside a nested loop!

With tuples, the transformation is like:

| (JNZ, y, L2) (RET, x) (LABEL, L2) (STARTCALL, gcd) (PARAM, y) (MOD, x, y, t0) (PARAM, t0) (CALL, gcd, t1) (RET, t1) | (LABEL, L1) -- add this new label at the top (JNZ, y, L2) (RET, x) (LABEL, L2) (COPY, y, t0) -- compute new first param (MOD, x, y, t1) -- compute new second param (COPY, t0, x) -- load new first param (COPY, t1, y) -- load new second param (JUMP, L1) -- "call" by jumping to top |

For fun, here are the tail recursion elimination applications of Factorial (remember a should be primed with 1 here):

| function fact(n, a) { if (n === 0) { return a; } return fact(n - 1, a * n); } | function fact(n, a) { while (true) { if (n === 0) { return a; } [n, a] = [n - 1, a * n]; continue; } } |

and Fibonacci (remember a and b should be primed with 0 and 1):

| function fib(n, a, b) { if (n === 0) { return a; } return fib(n - 1, b, a + b) } | function fib(n, a, b) { while (true) { if (n === 0) { return a; } [n, a, b] = [n - 1, b, a + b]; continue; } } |

Inline Expansion

Inlining saves the overhead of a function call at runtime. In practice it often exposes many other optimizations. Here’s a trivial example to show the basic idea, where we inline the body of g into the point in f where it is called. (If this is the only call to g, or we inline all calls to g, then we don’t have to generate a separate function for it at all):

| function g(x, y) { let z = x * y; console.log(z / 5); return z + 1; } function f(x) { if (x > 0) { console.log(g(10, x)); } return 1; } | function f(x) { if (x > 0) { let __g_x = 10; let __g_y = x; let __g_z = __g_x * __g_y; console.log(__g_z / 5); let __g_returnValue = __g_z + 1; console.log(__g__returnValue); } return 1; |

You should inline when the function is:

- Called only once in the program text

- Extremely small. Obvious candidates are those ridiculous getter methods like

int getX() { return x; }in Java and C++.

You should strongly consider inlining when the function is:

- Frequently called at runtime (i.e., called in a loop)

You probably don’t want to inline

- Recursive functions, but you can partially expand them (kind of like partial loop unrolling)

Further Reading

Nothing wrong with starting at the Wikipedia page for compiler optimizations and clicking from technique to technique to technique and getting lost and absorbed in this incredibly cool subdiscipline of compilers.

Thinking about these topics is good. It might help you see computation in a new way.

Also, read whatever you can about Fran Allen, the 2006 Turing Award winner who did so much of the seminal work in optimizing compilers.

Summary

We’ve covered:

- Motivation

- Where optimizations happen in a compiler

- What we try to optimize

- A whole bunch of techniques

- Where to learn more