Software Security

Secure Software

Security has several dimensions.

We have to practice security in hardware, in software, and at the human level. We can employ technical solutions such as passwords, tokens, encryption, access control lists, permission matrices, antivirus software, firewalls, whitelists, blacklists, and security zones. We can train users to be security conscious.

But did you know that malicious actors can often get around many of these technical security “solutions,” and do way more damage, just by exploiting poorly crafted and buggy code? That's where 90% of reported security incidents come from: exploits against defects in the design or code of software. NINETY. PERCENT.


A lot of people think that security is mostly about defenses such as those mentioned above. But attackers can get around those! Software security considers the code: it is about disciplined software design and development. Build the software right the first time. Prevention is the thing to shoot for, because detection and reaction are more expensive.

The field of secure software development is concerned with the software development lifecycle (requirements definition, analysis, design, development, testing, configuration, deployment, maintenance) and with the security concerns of each phase, namely:

- How to design secure software: the principles to follow (simplicity, failing fast, setting trust boundaries), how to define security requirements (not always easy), how to do threat modeling and risk analysis, and how to employ techniques like domain-driven design

- The coding constructs supporting secure software, like immutability, encapsulation, error isolation, validation

- Checking code with linters, static analysis tools, and (human) code reviews

- Testing, specifically unit testing, integration testing, penetration testing, fuzz testing

- Operations, such as configuration, deployment, monitoring, disaster recovery

And of course, knowledge of known vulnerabilities and classes of attacks (injection, denial-of-service, out-of-range values, buffer overflows) is important too. We’ll need to learn about:

- Low-level, memory-based attacks, often done by overflowing (overwriting or overreading!) buffers on the stack or heap, or by exploiting integer overflow. (Buffers are blocks of memory allocated for accepting input, perhaps from a network request or file read.) These attacks can also be carried out by messing with format or regex patterns, or via all kinds of shenanigans with pointer arithmetic. In addition to overflows, low-level attacks can exploit dangling pointers or even variable format strings.

- System security, such as on the World Wide Web or any distributed system. The web is everywhere. Even mobile apps make, you guessed it, web service calls.
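The integer-overflow case is easier to see with a concrete example. The Python sketch below simulates the 32-bit unsigned arithmetic that a C program might use to compute a buffer size. The function names and the 32-bit width are assumptions made for illustration. Python integers never overflow, so the wraparound is modeled explicitly with a mask.

```python
UINT32_MAX = 0xFFFFFFFF

def unchecked_size(count: int, elem_size: int) -> int:
    """Compute count * elem_size the way 32-bit unsigned C arithmetic would (wraps mod 2**32)."""
    return (count * elem_size) & UINT32_MAX

def checked_size(count: int, elem_size: int) -> int:
    """Compute count * elem_size, refusing any result that would not fit in 32 bits."""
    if count < 0 or elem_size <= 0:
        raise ValueError("count must be non-negative and elem_size positive")
    if count > UINT32_MAX // elem_size:
        raise OverflowError("allocation size would wrap around")
    return count * elem_size

# Hypothetical attacker-chosen count of 0x40000001 four-byte elements:
# the true size is 0x100000004 bytes, but the wrapped size is only 4.
# A program that allocates the wrapped size and then copies `count`
# elements would write far past the end of its buffer.
print(unchecked_size(0x40000001, 4))  # 4
try:
    checked_size(0x40000001, 4)
except OverflowError as e:
    print("rejected:", e)
```

The check divides instead of multiplying, so the test itself cannot wrap around.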

The Software Security Mindset

There are three big ideas that comprise the proper mindset for successful secure software development:

- Build Security In

- Define Security Requirements Properly

- Defend Deeply and Broadly

Build Security In

Security concerns must always be on your mind in everything you do. Security is not separate from software development; it is an integral part of it. Why?

- If you’re the developer, you know everything about the system, so you’ll know how to defend.

- Secure software practices catch many vulnerabilities that you might not even know about (and that you will never run into, because your well-built code keeps them from ever happening).

- Building secure domain objects and secure modules can often replace tons of ad-hoc defenses. Create the right software. Manipulate the right entities from the right domain with the right constraints. Entities should do only what they are intended to do and no more, and they should take on only sensible values. Secure software is better than security software, as they say! Be careful about going down the rabbit hole of throwing in an eclectic set of tools and defense mechanisms for specific threats. Structure your software according to best practices and you might not even need the specific defenses (e.g., XSS sanitizers).

- If you try to separate security from software development (leaving regular programming to the developers and security to others), you’ll most likely leave security to the end of the job or forget it altogether. Both lead to disaster:

- If you leave it until later, pentesters will find something and you won’t be able to deploy on time. That is, if they are able to find anything at all, since they can do little more than throw whatever they can at the system (they didn’t write the source code, so they don’t know it as well as you do). They’re human too, and they might miss things.

- If you skip security, you will get hacked and get destroyed.
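Here is a minimal Python sketch of what a secure domain object can look like. The Username type and its rule (3 to 20 lowercase letters, digits, or underscores) are hypothetical choices made for illustration. Invalid values are rejected when the object is constructed, so every Username in the system is well-formed and immutable. Characters such as < and > can never reach a page through this field.

```python
import re
from dataclasses import dataclass

# Hypothetical rule: 3-20 characters, lowercase letters, digits, or underscores only.
_USERNAME_PATTERN = re.compile(r"[a-z0-9_]{3,20}")

@dataclass(frozen=True)
class Username:
    """A domain primitive: once constructed, the value is guaranteed valid and cannot change."""
    value: str

    def __post_init__(self) -> None:
        # Fail fast: reject bad input at the boundary instead of sanitizing it later.
        if not isinstance(self.value, str) or not _USERNAME_PATTERN.fullmatch(self.value):
            raise ValueError("invalid username")

ok = Username("alice_01")
try:
    Username("<script>alert(1)</script>")
except ValueError as e:
    print(e)  # invalid username
```

With a type like this, functions that take a Username instead of a plain str can rely on the constraint without re-checking it.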

Defining Security Requirements

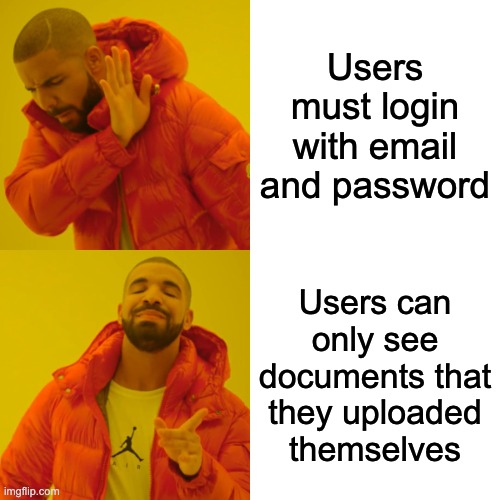

Sometimes requirements definition is hard. It is a learned skill to understand which are the actual requirements and which are just technical details. You can learn it, though. Which of these sounds correct as a requirement?

That was easy, right? Here’s another way to look at this issue: don’t get so caught up in fancy crypto, fancy math, digests, and hashing that you forget the basics (like authenticating every endpoint in a web service).
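The Python sketch below shows what "authenticating every endpoint" can mean in code. It guards a hypothetical photo-serving endpoint. The in-memory stores, the token format, and the status codes are all assumptions. The key idea is that the endpoint serving the image bytes checks the session and the ownership itself. It does not trust that only logged-in users will ever learn the URL.

```python
from typing import Dict, Optional, Tuple

# Hypothetical in-memory stores standing in for a real session store and database.
SESSIONS: Dict[str, str] = {"token-abc": "alice"}      # session token -> user
PHOTOS: Dict[str, Tuple[str, bytes]] = {               # photo id -> (owner, image bytes)
    "p1": ("alice", b"...alice's photo..."),
    "p2": ("bob", b"...bob's photo..."),
}

def current_user(token: Optional[str]) -> Optional[str]:
    """Authenticate: map a session token to a user, or None if missing or unknown."""
    return SESSIONS.get(token) if token else None

def get_photo(token: Optional[str], photo_id: str) -> Tuple[int, bytes]:
    """Serve a photo only to its owner; the image endpoint checks auth just like the page does."""
    user = current_user(token)
    if user is None:
        return 401, b"Unauthorized"
    record = PHOTOS.get(photo_id)
    # Authorize, answering "Not found" both when the photo is missing and when it
    # belongs to someone else, so an attacker cannot probe for valid ids.
    if record is None or record[0] != user:
        return 404, b"Not found"
    return 200, record[1]
```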

Story Time

There was this web app where users had to log in to get to pages in the app, and these pages linked to all sorts of images and other assets, which, you guessed it, just used plain old img elements. So ... (story continues in class)

To check that the requirements have been satisfied, your development process should include simulated attacks (penetration testing), fuzz testing, and maybe even correctness proofs.
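Fuzz testing can start very small. The Python sketch below is a hypothetical, home-grown random fuzzer; real projects would more likely use a coverage-guided fuzzing tool. The function under test, parse_port, is invented for illustration. The fuzzer feeds it thousands of random strings, including a few non-ASCII digit characters. It records any input that produces an out-of-range result or any exception other than the expected ValueError.

```python
import random
import string

def parse_port(text: str) -> int:
    """Hypothetical function under test: parse a TCP port number, 1 to 65535."""
    # isascii() matters: str.isdigit() also accepts characters like "²" and "٠".
    if not (1 <= len(text) <= 5) or not text.isascii() or not text.isdigit():
        raise ValueError("not a port number")
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError("port out of range")
    return port

def fuzz(target, iterations: int = 10_000, seed: int = 0) -> list:
    """Throw random strings at target; collect inputs that misbehave."""
    rng = random.Random(seed)  # seeded so any failure is reproducible
    alphabet = string.printable + "\u0660\u00b2\x00"  # add a few tricky characters
    failures = []
    for _ in range(iterations):
        text = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 8)))
        try:
            result = target(text)
            if not 1 <= result <= 65535:
                failures.append((text, "out-of-range result"))
        except ValueError:
            pass  # rejecting bad input is the expected behavior
        except Exception as exc:  # anything else is a bug worth investigating
            failures.append((text, repr(exc)))
    return failures

print(fuzz(parse_port))  # []
```

Pointing the same fuzz function at a naive parser that only checks isdigit() and calls int() quickly turns up inputs like "0" that slip through.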

Defending in Depth and in Breadth

The idea of “defense in depth” is known in circles outside software security. Here’s the idea, from the Viega and McGraw book:

The idea behind defense in depth is to manage risk with diverse defensive strategies, so that if one layer of defense turns out to be inadequate, another layer of defense will hopefully prevent a full breach ... Security cameras alone are a deterrent for some. But if people don't care about the cameras, then a security guard is there to physically defend the bank with a gun. Two security guards provide even more protection. But if both security guards get shot by masked bandits, then at least there's still a wall of bulletproof glass and electronically locked doors to protect the tellers from the robbers. Of course if the robbers happen to kick in the doors, or guess the code for the door, at least they can only get at the teller registers, since we have a vault protecting the really valuable stuff. Hopefully, the vault is protected by several locks, and cannot be opened without two individuals who are rarely at the bank at the same time. And as for the teller registers, they can be protected by having dye-emitting bills stored at the bottom, for distribution during a robbery.

Layers in information security will include firewalls, anti-virus software, crypto, authentication mechanisms, authorization rules, signatures, and maybe even correctness proofs. Some of the defenses you will write yourself; others will come from libraries. If any attacks do get through, you should have intrusion detection and forensic tools to help contain the breach and make repairs.
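Here is a toy Python sketch of layering inside a single application. A request must pass several independent checks, and each check guards against a different failure. The layer names, request fields, and limits are all hypothetical. If one layer is misconfigured (say, authorization is too generous), the remaining layers still limit the damage.

```python
from typing import Callable, List, Optional

# Each hypothetical layer inspects a request and returns None to pass it on,
# or a short reason string to reject it.
Layer = Callable[[dict], Optional[str]]

def rate_limit(req: dict) -> Optional[str]:
    return "too many requests" if req.get("recent_requests", 0) > 100 else None

def authenticate(req: dict) -> Optional[str]:
    return None if req.get("user") else "not authenticated"

def authorize(req: dict) -> Optional[str]:
    return None if req.get("user") in req.get("allowed_users", set()) else "not authorized"

def validate(req: dict) -> Optional[str]:
    # Even a fully authorized user cannot request an absurd amount.
    amount = req.get("amount")
    return None if type(amount) is int and 0 < amount <= 10_000 else "invalid amount"

LAYERS: List[Layer] = [rate_limit, authenticate, authorize, validate]

def handle(req: dict) -> str:
    for layer in LAYERS:
        reason = layer(req)
        if reason is not None:
            return "rejected: " + reason
    return "accepted"

print(handle({"user": "alice", "allowed_users": {"alice"}, "amount": 50}))     # accepted
print(handle({"user": "alice", "allowed_users": {"alice"}, "amount": 10**9}))  # rejected: invalid amount
```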

While depth defense is concerned with putting up a series of defenses that an attacker needs to break through, breadth defense recognizes that an attack can go after many parts of a system. For example, in how many ways can a denial of service, or some other restriction in availability, occur? This list can get you started:

- Insufficient network bandwidth

- The hard drives filling up

- Excessive memory paging or cache invalidations

- Hash collisions

- Deadlocks

- Livelocks

- Bad database queries that don’t use indexes

- Slow algorithms (e.g., worst case Quicksort is Θ(n²))
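The Python sketch below shows how input an attacker controls can push an algorithm into its worst case. It counts the comparisons made by a simple quicksort that always picks the first element of each range as its pivot. This textbook variant was chosen for illustration; it is not any particular library's sort. On already-sorted input every partition is maximally lopsided, so sorting n items takes n(n-1)/2 comparisons. Choosing the pivot at random, shown as the optional rng argument, is one common mitigation.

```python
import random
from typing import List, Optional, Tuple

def quicksort_comparisons(items: List[int],
                          rng: Optional[random.Random] = None) -> Tuple[List[int], int]:
    """Sort a copy of items with quicksort; return (sorted list, number of comparisons).

    With rng=None the first element of each range is the pivot (worst case on sorted input).
    With an rng, a random element is swapped to the front and used as the pivot.
    """
    data = list(items)
    comparisons = 0
    stack = [(0, len(data) - 1)]  # explicit stack avoids deep recursion in the worst case
    while stack:
        lo, hi = stack.pop()
        if lo >= hi:
            continue
        if rng is not None:
            p = rng.randint(lo, hi)
            data[lo], data[p] = data[p], data[lo]
        pivot = data[lo]
        i = lo
        for j in range(lo + 1, hi + 1):  # Lomuto-style partition around data[lo]
            comparisons += 1
            if data[j] < pivot:
                i += 1
                data[i], data[j] = data[j], data[i]
        data[lo], data[i] = data[i], data[lo]  # move the pivot to its final position
        stack.append((lo, i - 1))
        stack.append((i + 1, hi))
    return data, comparisons

n = 1000
_, worst = quicksort_comparisons(list(range(n)))
print(worst)  # 499500, which is n(n-1)/2
_, randomized = quicksort_comparisons(list(range(n)), random.Random(42))
print(randomized)  # expected on the order of 2n ln n, far below 499500
```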

Now, how many of these come from a poor architecture? Poor coding? Attacker knowledge? They might come from all three, so we need to guard against these at multiple levels. Profile the code. Fuzz test!

Principles

Part of the mindset of doing security involves living by a few basic principles that will become self-evident to you over time. These include:

- ✅ Set Trust Boundaries

- Protect resources with a series of gates, e.g., “this resource (database entity, API endpoint, private subsystem, etc.) is gated by X.” Gates include things like “only logged-in users,” “users with a particular permission,” or “packets originating from a given IP range.” You can set up zones, too, and distinguish, say, code on-the-edge (user-facing, untrusted) from code on-the-inside (data you control and can trust).

- ✅ Design for Least Privilege

- Give every user, subsystem, object, and function the least privilege (permission) necessary to do its job. Always make secure the default, and open up access little by little, explicitly, as needed.

- ✅ Maintain Integrity

- Restrict the domain of entities; a plain old int or string is often far too permissive. Have preconditions, postconditions, and invariants enforced in the code. Treat every piece of input as a threat.

- ✅ Fail Fast

- Identify problems right away! Don’t let inconsistencies worm their way through the data. If something can’t be done, don’t do it. If you crash in the middle of an operation, clean up!

- ✅ Audit

- Log everything that happens, but don’t log any secrets. Also keep the logs secure!

- ✅ Don’t Rely on Secrets

- The more secrets you have, the more likely they will leak or be guessed. People might even divulge them (accidentally, maliciously, or because they are physically threatened).

- ✅ Keep It Simple

- Complexity introduces more possibility for errors and makes it hard to reason about security. Every added bit of complexity introduces new attack vectors. The more inputs you have, the greater the attack surface. The more complex the inputs you allow (e.g., markup or formatted text or documents), the greater the attack surface. Attackers love going after your inputs.

- ✅ Prevent Leaks

- Don't leak error information. No PHP or SQL dumps on user-visible error pages. Don’t let error information help attackers guess. Never say “Incorrect password,” since that might imply the attacker guessed a valid user name. Prefer errors like “Not found” for permission errors (since an attacker might be trying to guess user names or resource ids).
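Several of these principles (fail fast, don't rely on secrets, prevent leaks) come together in even a small login check. The Python sketch below is illustrative only: the user store, the message text, and the PBKDF2 iteration count are assumptions. It gives the same answer whether the username or the password was wrong. It does comparable hashing work in both cases, and it compares hashes with hmac.compare_digest rather than ==.

```python
import hashlib
import hmac
import os
from typing import Dict, Tuple

GENERIC_ERROR = "Invalid username or password"
ITERATIONS = 100_000  # illustrative work factor, not a recommendation

def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)

def make_record(password: str) -> Tuple[bytes, bytes]:
    salt = os.urandom(16)
    return salt, hash_password(password, salt)

# Hypothetical user store: username -> (salt, password hash). Plain passwords are never stored.
USERS: Dict[str, Tuple[bytes, bytes]] = {"alice": make_record("correct horse battery staple")}
_DUMMY = make_record("dummy password")  # lets unknown users cost the same hashing work

def login(username: str, password: str) -> str:
    record = USERS.get(username)
    salt, expected = record if record is not None else _DUMMY
    candidate = hash_password(password, salt)
    # compare_digest avoids short-circuiting on the first differing byte.
    if record is not None and hmac.compare_digest(candidate, expected):
        return "Welcome, " + username
    # Same message whether the user exists or not: don't reveal which part was wrong.
    return GENERIC_ERROR

print(login("alice", "correct horse battery staple"))  # Welcome, alice
print(login("alice", "wrong"))                          # Invalid username or password
print(login("mallory", "wrong"))                        # Invalid username or password
```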

Did you notice?

None of these principles mentioned specific attacks like XSS or DDoS or Billion Laughs or SQL Injection.

That’s the point.

For more on secure design principles, see:

- The Principles of Security By Design, by OWASP, a very respected organization

- Four Principles of Secure Software Design, by Synopsys

- Seven Application Security Principles, by CPrime

Tactics

Getting impatient with all this high-level stuff? Wondering what to do in practice? Hang on, we’ll get there. In the meantime, here’s a list of some lower-level strategies and tactics:

- Validate all the inputs (types, bounds, origins, structure, meaning)

- Sanitize all the inputs (“input,” whether code, pattern, or markup, should never be “executable”)

- Favor immutability!

- If not immutable, make defensive copies when data comes in

- If not immutable, copy when sending data out

- Capture constraints in the domain classes (don’t rely on utility functions to do it)

- Don't duplicate code

- Understand the code you are copy-pasting from Stack Overflow

- Maximize cohesiveness and minimize coupling

- Minimize your ifs and loops

- Create each operation to do one thing and do it well

- Make inputs smaller

- Clean up resources (e.g., in a finally clause)

- Clear out memory! (to limit what a Heartbleed-style over-read can expose, and because OS hibernation may write memory to disk)

- Don’t let resources get exhausted

- Don’t write sensitive information to logs

- Maintain access controls on log files

- Don’t overflow the logs

- Maintain invariants

- Avoid global variables

- Avoid side effects

- Make all readers idempotent

- Reads should just be reads, never write anything as a side effect (e.g., HTTP GET)

- Know what you are doing if you write concurrent code

- Prefer messaging to shared data (because locks are hard and error-prone)

- Explicitly mark references (to the extent your language allows it)

- Understand everything about character sets and character encoding

- Watch out for modular arithmetic wraparound

- Do bounds checking

- Check pointer dereference operations (don’t dereference nulls)

- Don’t double-free pointers

- If your language has unsafe or metaprogramming features (reflection, loaders, serializers), use sparingly if at all

- Treat responses from native code (e.g., Python, Lua, and Java can all wrap C) as external untrusted input

- Don’t rely on case-sensitivity of file names

- Know what is in your config files, and by all means encrypt these

See this amazing OWASP Secure Coding Practices Quick Reference Guide. It has a great checklist.
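Three of the tactics above (favor immutability, copy data coming in, copy data going out) are easy to show in a few lines. In this Python sketch the Order and Cart classes are hypothetical. Order is immutable because it is a frozen dataclass holding a tuple. Cart stays mutable but never shares its internal list with callers.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

@dataclass(frozen=True)
class Order:
    """Immutable: fields cannot be reassigned, and items is stored as a tuple."""
    order_id: str
    items: Tuple[str, ...]

    @staticmethod
    def create(order_id: str, items: Iterable[str]) -> "Order":
        # Defensive copy on the way in: later changes to the caller's list don't affect us.
        return Order(order_id, tuple(items))

class Cart:
    """Mutable class: copy data both when it comes in and when it goes out."""

    def __init__(self, items: Iterable[str]) -> None:
        self._items: List[str] = list(items)  # copy in

    def items(self) -> List[str]:
        return list(self._items)  # copy out

incoming = ["book", "pen"]
order = Order.create("A-1", incoming)
incoming.append("laptop")       # caller mutates its own list afterwards
print(order.items)              # ('book', 'pen')

cart = Cart(["mug"])
cart.items().append("free TV")  # modifies only the returned copy
print(cart.items())             # ['mug']
```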

How about details? Where can we find examples of good (compliant with security guidelines) and bad (non-compliant) code? Next section!

Guidelines and Standards

It’s good to familiarize yourself with publications made by the pros. These can be (1) collections of known vulnerabilities and weaknesses, or (2) guidelines and coding standards that you follow so that the code you write is secure. Some are language-specific and some are pretty general. Here are some good ones:

- CWE: List of common weaknesses, together with some source-code mitigations you can employ

- CVE: Catalog of publicly disclosed security vulnerabilities

- CERT Coding Standards main page

- CERT C Coding Standard from SEI. Contains guidelines for writing secure C. Each guideline has examples of non-compliant and compliant code

- CERT C++ Coding Standard from SEI. Contains guidelines for writing secure C++. Each guideline has examples of non-compliant and compliant code

- CERT Coding Standard for Java

- MISRA guidelines for C and C++. Best practice guidelines, emphasizing those for embedded and safety-related systems

Many organizations provide summaries of these sources, which can be nice to browse before diving into the dense publications themselves. For example, Perforce has useful summaries and overviews of software security standards in general, the CWE, the CVE, OWASP, CERT C, ISO 26262, and MISRA C and C++.

Learning Software Security

Here are a few places to learn about Software Security as a discipline:

- Presentations:

- Software Security slides from University of Colorado (121 slides)

- Short presentation on securing the SDLC (33 slides)

- Courses:

- Coursera

- JavaScript Security Specialization (by Vladimir de Turckheim). Consists of four courses.

- Secure Coding Practices Specialization (from UC Davis). Consists of four courses: Principles of Secure Coding, Identifying Security Vulnerabilities, Identifying Security Vulnerabilities in C/C++ Programming, and Exploiting and Securing Vulnerabilities in Java Applications.

- Writing Secure Code in C++ Specialization (by InfoSec). Consists of four courses: Introduction to C++, C++ Lab Content, C++ Interacting with the World and Error Handling, and C++ Superpowers and More.

- LinkedIn Learning

- Linux Foundation

- Michael Hicks

- Software Security On-line Course AWESOME

- Troy Hunt

- Web Security Fundamentals Course presented by Troy Hunt (Web-specific but awesome)

- TryHackMe

- Hacker101


- Books:

- Secure by Design (a great recent book)

- Software Security: Building Security In, by Gary McGraw (a 2006 book considered a classic)

- Learn C the Hard Way, specific to the C language but relevant to security

- Online Guides:

- Apple’s Software Security Overview

- Apple’s Secure Coding Guide

- OWASP Security by Design Principles

- OWASP Developer Guide RECOMMENDED

- Articles and Papers:

Learning by Doing: Capture the Flag

- PicoCTF

- HackTheBox CTF

- HackThisSite

- Pwnable.kr

- Also see this big list of CTFs at ctftime.org

Learning by Doing is Crucial

Therefore, you are expected to create an account on a CTF site and capture a few dozen flags throughout this course.

Although designed for high school students, PicoCTF is just fine! After signing up and becoming acquainted with the site, head over to the playlists page and work through the playlist called “The Beginner's Guide to the picoGym”.

You should also read the entirety of The CTF Primer.

Recall Practice

Here are some questions useful for your spaced repetition learning. Many of the answers are not found on this page. Some will have popped up in lecture. Others will require you to do your own research.

- How does software security relate to computer (cyber) security? Software security is a subset of computer security.

- What kinds of things are computer security but not software security? access control lists, permission matrices, antivirus software, firewalls, whitelists, blacklists, and security zones.

- What percentage of reported security incidents result from exploits against defects in the design or code of software? 90%.

- What are the phases in the software development lifecycle (SDLC)? Ideation • Planning • Requirements Analysis and Definition • Architecture and Design • Implementation • Static Analysis and Code Reviews • Testing • Documentation • Integration • Deployment • Maintenance • Evaluation • Retirement • Disposal.

- What are the two broad areas of software security? (1) Low-level security, concerned with memory attacks, and (2) system security, concerned with large-scale, multi-user applications like webapps.

- What are the three pillars of the software security mindset? Build security in. Define security requirements properly. Defend deeply and broadly.

- What does it mean to “build security in”? It means to design and implement the core domain objects and core business logic to prevent exploits, rather than leaving security concerns to separate libraries with ad-hoc solutions.

- “Secure software is better than ________________ software.” Security.

- Why might you not even need specific security solutions like, say, an XSS Sanitizer? If you define your domain objects to be restricted to certain character patterns, XSS attacks through those objects can be ruled out entirely.

- What might happen if you don’t build security in, and you give the system to the security experts and pen testers after you finish development? The pentesters will find a ton of problems and tell you not to release the project without a massive overhaul.

- What might happen if you don’t build security in, and you end up just deploying the system as-is? You will get hacked and destroyed.

- Secure software development is not really about ethical hacking and penetration testing, but rather about ________________. Disciplined software design and development.

- What are some coding constructs that increase security? Immutability, encapsulation, error isolation, validation.

- What are techniques for improving your confidence that your code is secure? Manual code reviews, linters, and static analysis tools.

- What kinds of mistakes do people make when defining security requirements? They sometimes mistake a technique or use case for a bigger concern, e.g., saying a login page is a requirement, when the actual requirement is not to divulge information to the wrong user.

- Give an example of an exploit stemming from an improperly defined security requirement. A requirement that says “users must log in to access a page with links to their photos” says nothing about authenticating the actual service that fetches the photos, so an attacker might easily guess the URLs of anyone’s photos.

- What is the meaning of “defense in depth”? Having a series of defenses so that if an attack isn't caught by one, it will probably be caught by the next one on the chain, and so on.

- What are some examples of layered protections that would appear in depth defense? Firewalls, anti-virus software, crypto, authentication mechanisms, authorization rules, signatures, correctness proofs.

- What is an example of the need to apply defenses broadly, as well as in depth? An availability attack can exploit flaws stemming from, say, insufficient network bandwidth, filling up hard drives, excessive memory paging or cache invalidations, hash collisions, deadlocks, livelocks, bad database queries that don’t use indexes, or slow algorithms.

- What are the fancy terms for (1) trusted code in your security zone whose input you can trust, and (2) code from untrusted zones? (1) Code-on-the-inside, (2) Code-on-the-edge.

- What is the concept of designing for least privilege? Having the default situation being that any user or process is able to do the minimum possible to carry out its task, and no more.

- What do we define to help maintain integrity? Preconditions, postconditions, and invariants.

- Why do we have to fail fast? An unhandled failure can propagate an inconsistency in state leading to horrifying situations down the line.

- Auditing is important for security, especially for forensics and intrusion detection, but we have to be careful when logging. What are the two main concerns? Never log secrets, and keep the logs themselves secure.

- Why should you not rely on secrets? They can be accidentally or maliciously leaked, or divulged by a person who is under threat.

- Why should code be kept simple? The more complex your code, the greater the attack surface, and the greater chance for the introduction of bugs and flaws.

- Why should you be somewhat coy when reporting errors to users? The user may be an attacker looking for opportunities so being too-specific about error messages (e.g., distinguishing not found and found-but-you-don’t-have-access, or distinguishing bad-password from unknown-username-OR-password) may leak useful information to an attacker.

- What is the difference between security principles and tactics? Principles are high level, like “defend in depth,” “fail fast,” “don’t rely on secrets,” and “prevent leaks.” Tactics are specific programming practices like “don’t double-free pointers” or “avoid global variables.”

- What are some organizations that publish guidelines and standards for secure software development? CERT, OWASP.

- What is Capture-The-Flag (CTF) in security? An exercise or game where you try to find and exploit vulnerabilities in a system.

- Why should you take part in CTF exercises or competitions? To learn by doing.

Summary

We’ve covered:

- Why secure software matters

- Building security in

- Defining security requirements

- Defending deeply and broadly

- General principles

- Specific tactics

- Important guidelines and standards to know about

- A few good resources